René Vidal develops the techniques that will enable computers to tell a tiger from a tree—and learn from what they see.

Browse along the shelves of your local movie rental shop, and you’re sure to find them. From the 1926 classic Metropolis down to the present-day Star Wars, sci-fi films for decades have cast robots in leading roles, capturing the public’s imagination along the way. However, for René Vidal, the possibility of creating a fully autonomous robot may not be as far off as it seems. “I think it’s hard but feasible,” he says, “maybe within the next 20 to 40 years. It’s a matter of putting perception, action, and learning together.”

While robotics researchers are engaged with studies ranging from neural networks to voice recognition, Vidal focuses on the science of seeing, specifically at the intersection of computer vision, machine learning, robotics, and control. The Whiting School of Engineering professor has joint appointments with Biomedical Engineering, Computer Science, and Mechanical Engineering. As he puts it, “The main motivation of my research is to gain an integrated understanding of a class of vision, robotics, and control problems that I believe will enable the development of the next generation of intelligent machines.”

Vidal, a native of Chile, began his academic career in electrical engineering; however, the robotics bug bit him early on. While completing his PhD in electrical engineering and computer science at the University of California, Berkeley, he began investigating the issues involved in vision and control. “My first project was to have a helicopter fly autonomously and land on a moving platform,” he recalls. “So we developed the necessary vision algorithms so that the helicopter, using its onboard camera, could ‘figure out’ its position and orientation relative to the landing target. This work made me realize that vision is a lot more challenging than I thought.”

Taking up the challenge, Vidal pursued those studies, which led him to his appointment at the Whiting School in January 2004, and placement as a member of its highly regarded Center for Imaging Science (CIS) in Clark Hall. Affiliated with the Whitaker Biomedical Engineering Institute, CIS brings together a diverse group of Hopkins researchers engaged in a broad spectrum of imaging studies, including computer vision, medical imaging and computational anatomy, and target and statistical pattern recognition. Within this interdisciplinary environment, Vidal is taking a fresh look at various problems in dynamic vision, and by extension recognition, perception, and action.

How to Encode a Predator

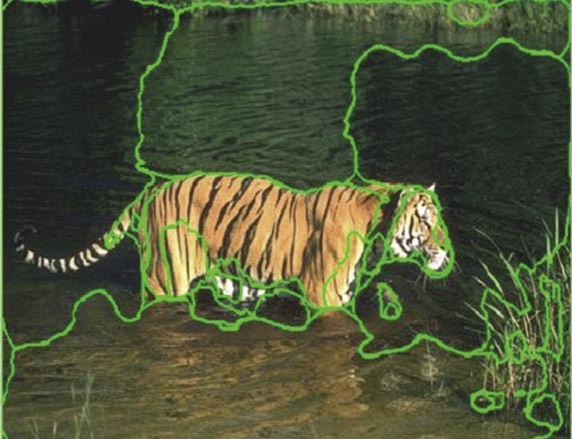

As with all research, Vidal’s study starts with a question: How can a computer visually isolate and recognize a single target or action within a complex and dynamically changing environment? Take, for example, a photograph of a tiger walking through a jungle. Despite the constant shifts in motion, shadow, and light, not to mention the presence of other objects like bushes and branches, the human eye, trained by years of experience, can instantly pick out the animal. However, a computer, which must be programmed in minute detail to complete even the simplest task, cannot distinguish the tiger from its surroundings. “A computer doesn’t know what a tiger is to begin with—it can only relate to defined colors, textures, motions, and shapes,” says Vidal. “So how do you encode these visual cues mathematically and use them to recognize objects and actions? That is significantly more difficult.”

To answer this question, Vidal is developing a mathematically based machine learning technique he calls Generalized Principal Component Analysis, or GPCA. Through an advanced series of mathematical techniques, GPCA can extract a compact representation of visual information automatically (in this case, the tiger) from a larger set of data (the jungle). What’s more, if several representations are extracted, then the computer has a basis for distinguishing one from the others—in short, a platform for autonomous recognition.

Wheeled Robots on the Hunt

Another area of Vidal’s current vision research involves coordinating the actions of multiple agents through the perception and estimation of motion. This involves robotic devices plotting their positions and orientation relative to what they “see,” using both onboard cameras and GPS. In one study, two teams of small wheeled robots actually play a game of “fox and hounds.” The pursuers track the evaders with the help of a small hovering helicopter that is observing the action below. Eventually, Vidal hopes to make the visual interaction of these robots fully automatic, using cameras only. That scenario, he admits, is “very challenging.”

As he explains, “To do that, you need the ability to recognize and track these multiple objects that are in motion, which involves developing algorithms that are provably correct. That is what I have been working on for the last year and a half.”

By integrating these three research areas—recognition, perception, and action—into a single framework with GPCA, Vidal feels that he is on his way to developing machines that can learn from what they see.

Imaging Solutions for Remote Surgery

Beyond robotics and machine learning, Vidal also is exploring additional applications in the field of biomedical engineering. “These same techniques can be applied to the recognition of normal versus abnormal in scanning the human body,” he notes. Such scans could instantly detect physiological abnormalities well in advance of an actual incident, such as a heart attack or other organ failure.

To detect such abnormalities, Vidal and his research team also are conducting studies in spinal cord and heart motion analysis. With the latter, he hopes to produce an imaging solution that will allow a surgeon to operate remotely on a living human heart. “To conduct a remote surgery precisely with a robot, the surgeon would need a video image that looks static, even though the heart is beating,” he says. “By estimating the motion of the heart and compensating for it, we want to present a static image of the tissue being examined.”

For Vidal, our capability as humans to avoid collisions with objects as we walk around is in itself an amazing feat. “We can recognize and interact with the world around us in a remarkably natural fashion,” he says with a smile. “However, all of these things that are simple and automatic to us are actually very difficult to do arithmetically on a computer.”

Discover more about René Vidal’s work at www.cis.jhu.edu/~rvidal/