Small uncrewed aerial vehicles (UAVs) are increasingly used for pick-and-place tasks such as delivering packages and managing warehouses, as well as for agricultural duties such as monitoring crops and livestock. UAVs’ agility lets them maneuver through highly congested airspace with ease, but their small size limits their sensing and computing abilities.

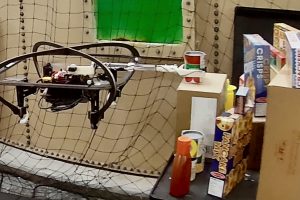

A team from the Autonomous Systems, Control and Optimization (ASCO) Laboratory, led by a researcher at the Johns Hopkins Institute for Assured Autonomy (IAA), has created an affordable, lightweight aerial vehicle research platform for agile object detection and interaction tasks. The team demonstrated that the platform can accurately detect, track, and grasp objects in complex environments with a 93% success rate. Their paper, “A Small Form Factor Aerial Research Vehicle for Pick-and-Place Tasks with Onboard Real-Time Object Detection and Visual Odometry,” was featured at IEEE’s International Conference on Intelligent Robots and Systems (IROS).

“Our approach will allow UAVs to take on a variety of tasks with increasing accuracy,” said Marin Kobilarov, an associate professor at the Whiting School of Engineering’s Department of Mechanical Engineering who is also a member of the school’s Laboratory for Computational Sensing and Robotics (LCSR). “The platform is not only highly successful at grasping objects, but it is also generalizable for other research tasks in constrained environments.”

The team created its novel platform by equipping an existing quadrotor (a UAV with four rotors) with custom hardware components, including a new protective cage, shock-absorbing feet, and a grasping device called the Gripper Extension Package (GREP). They also developed a framework for running computationally intensive algorithms on the small onboard computer, including a neural network that detects objects in camera images.

“Research platforms often rely on external sensors and processing, which isn’t feasible in real-world applications,” said Cora Dimmig, a doctoral student and major contributor to the project. “We strived to show that entirely onboard computation is possible on a small-form-factor vehicle while maintaining a high level of performance.”

The researchers plan to improve their platform’s ability to interact with the environment by more tightly coupling their control strategy with the perception information collected onboard the vehicle. They believe that this will further improve the accuracy, agility, and applicability of their approach.

They have also made the vehicle’s hardware designs and software framework open source in the hope that other researchers can use them for applications on a wide range of small vehicles.

Co-authors include Dimmig, Anna Goodridge, Gabriel Baraban, Pupei Zhu, and Joyraj Bhowmick, all from the Whiting School of Engineering.

This work was supported by the National Science Foundation.