The world is a noisy place, filled with overlapping sounds that often make it hard to focus. With support from the National Institutes of Health (NIH), Mounya Elhilali is helping us tune in more effectively—not just to conversations but also to the vital sounds used to diagnose disease and the cues that help people with hearing challenges navigate their surroundings.

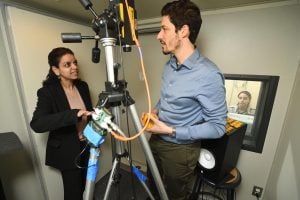

A professor in the Whiting School of Engineering’s Department of Electrical and Computer Engineering and the founder of the Laboratory for Computational Audio Perception (LCAP), Elhilali is leading efforts to make stethoscopes intelligent, improve speech recognition in noisy environments, and push the boundaries of how technology can enhance human perception.

“With NIH support, we are applying what we learn about how the brain filters sounds in noisy environments to create smarter tools that have a real impact,” said Elhilali.

One major focus of this work has been improving the tools that doctors use to detect respiratory diseases, where quick and accurate diagnoses can mean the difference between life and death for people around the globe.

In 2022, Elhilali and colleagues James West, a professor of electrical and computer engineering, and Eric McCollum, an associate professor of pediatrics at the Johns Hopkins School of Medicine, were awarded a $2.3 million grant from NIH’s National Heart, Lung, and Blood Institute to develop a smart digital stethoscope aimed at revolutionizing pulmonary diagnostics—particularly pediatric diagnostics. More than two million children die every year of acute lower respiratory infections—the leading cause of childhood mortality worldwide.

Pictured (left to right): Jiarui Hai, Weizhe Guo, Prof. Mounya Elhilali, Alex Gaudio, and Nahaleh Fatemi.

“Traditional stethoscopes, while widely used, are often unreliable due to interference from noise, the need for expert interpretation, and subjective variability,” she said. “Our research introduces new, advanced listening technology that combines artificial intelligence (AI) with new sensing materials to make diagnoses more accurate.”

This advanced stethoscope uses a special material that can be adjusted to muffle or block out background noise, making lung sounds clearer. It also uses artificial intelligence, including models of the airways and neural networks, to analyze breathing sounds. Elhilali and her team trained their algorithm on data from more than 1,500 patients across Asia and Africa. The technology is currently being used in clinical studies in Bangladesh, Malawi, South Africa, and Guatemala, as well as in the pediatric emergency room at Johns Hopkins Children’s Center.

A version of the device, now called Feelix, has received FDA approval and is being marketed by the Baltimore spinoff Sonavi Labs.

“This technology is already making an impact. It is being used in rural clinics, mobile health units, and large hospitals to assist emergency responders and health care providers around the world to provide rapid, cost-effective pulmonary assessments, especially in areas with limited access to imaging tools like X-rays and ultrasounds,” Elhilali said.

Beyond medical diagnostics, Elhilali’s research explores how the brain processes sound in noisy environments, a phenomenon known as the cocktail party effect. Another NIH-supported project tackled this problem using a new adaptive theory of auditory perception. With support from the National Institute on Aging, Elhilali explored the role of neural plasticity in allowing our brains to adapt to the changing soundscape around us by balancing what our senses hear against our mental and attentional states.

“By incorporating insights from brain science, this research examines auditory perception in young and aging brains and has the potential to bridge a gap between traditional hearing aids and truly intelligent auditory devices,” she said.

Elhilali is using brain recordings from both humans and animals to better understand how brain circuits isolate important sounds from background noise. In a recent study, “Temporal coherence shapes cortical responses to speech mixtures in a ferret cocktail party,” published in Communications Biology, she and her colleagues found that when an animal focuses on a particular speaker, the brain synchronizes its activity to match the timing and sound features of that voice, a mechanism known as temporal coherence.

“This discovery provides crucial insights into selective hearing and has significant implications for improving speech recognition technology,” said Elhilali. “By applying these principles, future hearing aids and communication devices could be designed to better filter unwanted noise, benefiting individuals with auditory processing challenges.”