Johns Hopkins electrical and computer engineers are pioneering a new approach to creating neural network chips—neuromorphic accelerators that could power energy-efficient, real-time machine intelligence for next-generation embodied systems like autonomous vehicles and robots.

Electrical and computer engineering graduate student Michael Tomlinson and undergraduate Joe Li, both members of the Andreou Lab, used natural language prompts and ChatGPT 4 to produce detailed instructions for building a spiking neural network chip, one that operates much like the human brain. Through step-by-step prompts to ChatGPT 4, starting with mimicking a single biological neuron and then linking more neurons to form a network, they generated a full chip design that could be fabricated.

“This is the first AI chip that is designed by a machine using natural language processing. It is similar to us telling the computer ‘Make an AI neural network chip’ and the computer spits out a file used to manufacture the chip,” said Andreas Andreou, professor of electrical and computer engineering, co-founder of the Center for Language and Speech Processing, and member of the Kavli Neuroscience Discovery Institute and Johns Hopkins' new Data Science and AI Institute.

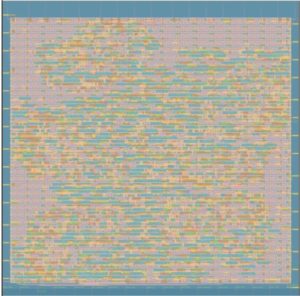

Physical layout of the spiking neural network chip designed by ChatGPT 4

The work began at the NSF-funded 2023 Neuromorphic Cognition Engineering Workshop held last summer. The resulting paper, “Designing Silicon Brains using LLM: Leveraging ChatGPT for Automated Description of a Spiking Neuron Array,” appears on the preprint site arXiv.

The chip’s final network architecture is a small silicon brain with two layers of interconnected neurons. The user can adjust the strength of these connections using an 8-bit addressable weight system, which lets the chip be loaded with learned weights that determine its functionality and behavior. Reconfiguration and programming are done through a Serial Peripheral Interface (SPI) sub-system, which acts like a remote control for the chip. This SPI sub-system was also designed by ChatGPT using natural language prompts.
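
To make the idea of addressable 8-bit weights more concrete, the short Python sketch below models two layers of simple integrate-and-fire neurons whose connection strengths are written one address at a time. It is only an illustration and not the team's design: the class and function names, the firing threshold, the leak value, the flat address-to-synapse mapping, and the choice of unsigned weights are all assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class SpikingLayer:
    """One layer of integrate-and-fire neurons (illustrative model only)."""
    n_inputs: int
    n_neurons: int
    threshold: int = 256  # assumed firing threshold, not from the article
    leak: int = 1         # assumed per-step leak, not from the article
    weights: list = field(init=False)
    potentials: list = field(init=False)

    def __post_init__(self):
        # All connections start at zero strength until they are programmed.
        self.weights = [[0] * self.n_inputs for _ in range(self.n_neurons)]
        self.potentials = [0] * self.n_neurons

    def write_weight(self, address: int, value: int) -> None:
        """Store one unsigned 8-bit weight at a flat address (assumed mapping)."""
        if not 0 <= value <= 0xFF:
            raise ValueError("weight must fit in 8 bits")
        if not 0 <= address < self.n_inputs * self.n_neurons:
            raise IndexError("weight address out of range")
        neuron, synapse = divmod(address, self.n_inputs)
        self.weights[neuron][synapse] = value

    def step(self, in_spikes):
        """Advance one time step; return a 0/1 spike for each neuron."""
        out = []
        for n in range(self.n_neurons):
            # Add the weight of every input that spiked, then apply the leak.
            v = self.potentials[n]
            v += sum(w for w, s in zip(self.weights[n], in_spikes) if s)
            v = max(v - self.leak, 0)
            if v >= self.threshold:
                out.append(1)
                v = 0  # reset the membrane potential after a spike
            else:
                out.append(0)
            self.potentials[n] = v
        return out


def run_network(layers, spike_train):
    """Feed each input spike vector through the layers in order."""
    outputs = []
    for spikes in spike_train:
        for layer in layers:
            spikes = layer.step(spikes)
        outputs.append(spikes)
    return outputs


# Program a tiny two-layer network, then drive it with four identical inputs.
hidden = SpikingLayer(n_inputs=2, n_neurons=2)
output = SpikingLayer(n_inputs=2, n_neurons=1)
hidden.write_weight(0, 200)  # hidden neuron 0, input 0
hidden.write_weight(3, 200)  # hidden neuron 1, input 1
output.write_weight(0, 255)
output.write_weight(1, 255)
result = run_network([hidden, output], [[1, 1]] * 4)
```

In this toy model, changing the stored weights changes which input patterns make the output neuron fire, which is the sense in which programmed weights determine the network's behavior.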

Tomlinson explained that they designed a simple neural network chip without complex coding as a proof of concept. Before sending the chip for manufacturing, the team validated it through extensive software simulations to ensure that the final design would work as intended, iterating on the design to address any issues.

The final design was submitted electronically to the SkyWater “foundry,” a chip fabrication service, where it is currently being “printed” using a relatively low-cost 130-nanometer CMOS manufacturing process.

“While this is just a small step towards large-scale automatically synthesized practical hardware AI systems, it demonstrates that AI can be employed to create advanced AI hardware systems that in turn would help accelerate AI technology development and deployment,” said Tomlinson. “Over the last 20 years, the semiconductor industry has made great progress in scaling down the feature size of physical structures on computer chips enabling more complex designs in the same silicon area. The latter advanced computer chips, in turn, support more sophisticated software Computer-Aided Design algorithms and the creation of more advanced computing hardware yielding the exponential growth in computing power that is powering today’s AI revolution.”