Computer vision systems show promise for improving the accuracy of colonoscopy screening, a procedure in which a camera is used to view the inside of the large intestine to detect cancer and other diseases. Training and assessing computer vision algorithms typically requires a properly labeled dataset that contains “ground truth,” information that is verified to be true under realistic conditions. To date, ground-truth datasets have been difficult to acquire for colonoscopy images.

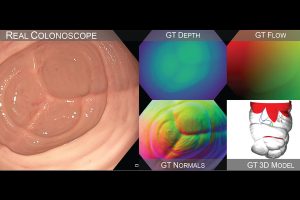

A Johns Hopkins University research team has created a dataset of simulated colonoscopy videos, called C3VD, that is more representative of real colonoscopy imaging than existing datasets and can help researchers evaluate how their computer vision models will perform in real-world scenarios. Published recently in Medical Image Analysis, “Colonoscopy 3D video dataset with paired depth from 2D-3D registration” addresses challenges that have historically made colonoscopy a difficult setting for computer vision tasks such as 3D reconstruction and depth estimation.

According to the team, which includes Hopkins experts in optics, computer science, medical art, and gastroenterology, this is the first colonoscopy reconstruction dataset that includes realistic colonoscopy videos labeled with registered ground truth. The researchers hope their work will contribute to the development of advanced computer vision algorithms that can assist endoscopists in real time during colonoscopies.

“Artificial intelligence can dramatically improve colonoscopy procedures, for instance by improving lesion detection or ensuring the full colon is screened. But AI models rely on high-quality data. Our dataset includes commercial colonoscope videos of realistic colon phantoms, with pixel-level annotations of the distance of every pixel from the camera. Researchers around the world are now using this dataset to train and test the ability of AI to estimate colon geometry directly from a video,” said Nicholas Durr, an associate professor of biomedical engineering and senior author of the paper.

To develop the dataset, researchers from the School of Medicine’s Department of Art as Applied to Medicine first created a digital sculpture of the colon from reference anatomical images and then physically cast it in silicone. Using this silicone colon model, the researchers recorded video sequences with a clinical HD colonoscope so that the footage captured realistic lighting, sensor gain, gamma, and noise, all factors that play an important role in the performance of computer vision algorithms.

After recording the simulated colonoscopy videos, the researchers needed to virtually align each video to the digital model, a process known as registration, so that they could extract ground-truth information for every frame. They developed a registration algorithm that combines artificial intelligence, ray tracing, and optimization. By registering the videos to the model, they created a video dataset of more than 10,000 frames in which each frame has ground-truth depth, surface normals, optical flow, occlusion, coverage, and camera pose.

“These are essential components for building reliable computer vision models, making our dataset useful for various potential applications that may improve colonoscopy quality,” said Taylor Bobrow, a graduate student in biomedical engineering and lead author of the paper.

While the study’s primary contribution was the validation dataset, Bobrow says the team also made an interesting finding related to the registration algorithm. Most work on 2D-to-3D image registration has focused on radiological imaging such as X-rays, whereas the Johns Hopkins researchers worked with optical images. They discovered that transforming the optical images into the depth domain before registration significantly improved registration accuracy compared with registering the optical images directly.

The team hopes the open-source dataset will enable the wider research community to improve the performance of computer vision tools for colonoscopies.

Bobrow believes that C3VD has the potential to reduce the number of lesions missed during routine colonoscopies, misses that can lead to later diagnoses of colorectal cancer.

“A big focus for researchers is on using AI to develop real-time feedback systems that achieve complete visualization of the colon and alert endoscopists if they miss inspecting a tissue area during a procedure. Our dataset could be used as a tool for validating algorithms that flag missed regions,” said Bobrow.

Co-authors include biomedical engineering graduate students Mayank Golhar and Rohan Vijayan; Venkata S. Akshintala, an assistant professor of gastroenterology; and Juan R. Garcia, an associate professor in art as applied to medicine.