The data needed to train AI models to recognize surgical instruments during an operation is typically expensive and time-consuming to collect. That limits the ability of intelligent robotic systems to improve patient outcomes, reduce operation times, and decrease the risk of complications. Now, Johns Hopkins engineers have created a new dataset showing how one simple change, a few dots of UV-reactive paint, can accelerate this kind of data collection.

In work being presented next week at the 2025 IEEE International Conference on Robotics and Automation in Atlanta, Georgia, researchers from the Laboratory for Computational Sensing and Robotics (LCSR) introduce SurgPose, an open-source surgical video dataset made up of paired videos of the da Vinci Surgical System in action: one recorded in regular light, the other in the dark under UV light.

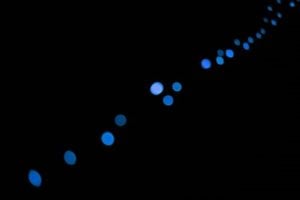

Inspired by work from the University of California, Berkeley, and in collaboration with the University of British Columbia (UBC), the researchers marked key points on surgical instruments with ultraviolet-reactive paint, which is invisible to the naked eye but glows under UV light. They then recorded the da Vinci system executing the same trajectory twice with these instruments, once under UV lighting and once under regular lighting.

“The videos taken in normal light—which look like real surgical scenes—are input into deep learning algorithms, while the videos taken in UV light, where the dots of paint glow to precisely mark the position of each instrument, provide accurate ‘ground truth’ annotations,” explains Haoying “Jack” Zhou, a visiting graduate scholar at LCSR informally advised by Peter Kazanzides, a research professor of computer science and the director of JHU’s Sensing, Manipulation, and Real-Time Systems lab. “In AI, ‘ground truth’ refers to verified reference points, so here, those glowing dots create an automatic labeling system that shows exactly where each surgical tool is positioned, eliminating the need for humans to manually mark thousands of video frames.”
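The labeling idea Zhou describes can be sketched in a few lines of Python. This is a hypothetical illustration, not the SurgPose team's actual pipeline. It assumes each UV frame arrives as a 2D grid of grayscale intensities. It treats every 4-connected group of pixels above an assumed brightness `threshold` as one painted dot and reports that group's centroid as a keypoint label. The helper names `extract_keypoints` and `label_pairs`, along with the `threshold` and `min_area` defaults, are invented for this example.

```python
from collections import deque


def extract_keypoints(uv_frame, threshold=200, min_area=3):
    """Return (row, col) centroids of bright blobs in a grayscale UV frame.

    Hypothetical sketch: pixels at or above `threshold` are assumed to be
    glowing paint; each 4-connected group of at least `min_area` such pixels
    becomes one keypoint, located at the group's mean pixel position.
    """
    height, width = len(uv_frame), len(uv_frame[0])
    visited = [[False] * width for _ in range(height)]
    keypoints = []
    for r in range(height):
        for c in range(width):
            if visited[r][c] or uv_frame[r][c] < threshold:
                continue
            # Breadth-first flood fill over 4-connected bright pixels.
            queue = deque([(r, c)])
            visited[r][c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < height and 0 <= nx < width
                            and not visited[ny][nx]
                            and uv_frame[ny][nx] >= threshold):
                        visited[ny][nx] = True
                        queue.append((ny, nx))
            # Discard tiny blobs, which are more likely noise than paint dots.
            if len(pixels) >= min_area:
                cy = sum(p[0] for p in pixels) / len(pixels)
                cx = sum(p[1] for p in pixels) / len(pixels)
                keypoints.append((cy, cx))
    return keypoints


def label_pairs(normal_frames, uv_frames, **kwargs):
    """Pair each normal-light frame with keypoints found in the matching UV frame.

    Assumes both recordings replay the same trajectory, so frame i of one
    video corresponds to frame i of the other.
    """
    return [(frame, extract_keypoints(uv, **kwargs))
            for frame, uv in zip(normal_frames, uv_frames)]
```

Because the robot replays the same trajectory in both recordings, `label_pairs` simply matches frames by index. A real system would likely also need to handle timing drift between the two runs and map each dot to a named instrument part, both of which this sketch ignores.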

The result is a large, comprehensive dataset that should help improve the development and evaluation of AI models capable of understanding surgical scenes, a vital component of robot-assisted surgery.

“Understanding where surgical tools are—and how they move—is crucial for the future of smarter, safer surgeries,” says Zijian Wu, Engr ’23 (MSE), now a doctoral student at UBC and first author on the paper. “There are many exciting downstream applications based on pose estimation, such as augmented reality in surgical training and autonomous robotic surgery.”

However, the researchers note that issues remain, chiefly the limited diversity of the dataset itself.

“Even though the size of the dataset is much larger than existing ones, its data distribution is still not diverse enough to use SurgPose alone in training a model for use in real clinical applications,” Wu explains. “So we’re looking to combine these real data with synthetic data to train AI systems that are better at handling diverse surgical environments.”

The group also plans to add data covering more types of instruments, human-demonstrated trajectories of real-life surgical tasks, and in vivo and cadaver environments for a more comprehensive evaluation of the dataset.

Still, SurgPose is a valuable resource for testing and comparing pose estimation algorithms under realistic conditions, and researchers in the community can use it to develop cutting-edge AI algorithms for tracking surgical instruments in existing endoscopic videos, according to Zhou.

“Our end goal is to achieve a comprehensive benchmark that pushes the surgical robotics field forward and helps develop more intelligent and trustworthy surgical AI,” Wu says.

UBC authors of this work include master’s students Randy Moore and Alexandre Banks, advised by Professor Septimiu E. Salcudean. Intuitive Surgical’s Adam Schmidt, formerly a PhD student at UBC, also contributed to this research.