Johns Hopkins computer scientists have invented a new open-source, AI-powered tool that promises to make rich, customized visual stories like webtoons and informational videos faster and easier for everyone to create, regardless of technical skill.

“Our approach, called ID.8, uses generative AI to help people collaboratively create and customize interactive visual stories,” says lead author Victor Nikhil Antony, a PhD student in the Whiting School of Engineering’s Department of Computer Science, who worked with John C. Malone Assistant Professor Chien-Ming Huang on the project.

Their work, “ID.8: Co-Creating Visual Stories with Generative AI,” appears in ACM Transactions on Interactive Intelligent Systems.

ID.8 works by integrating various generative AI models—which produce text, audio, and images—into a single workflow to make the visual storytelling process accessible to both beginners and experienced creators without requiring specialized artistic or coding skills, the researchers say.

Their goal was to create an ethical, collaborative paradigm that can amplify a broad range of perspectives, help beginners develop skills, and support professional creatives, affirming that AI is an assistive technology that enhances creativity rather than replacing human ingenuity.

“There is a valid fear that AI tools could reduce demand for human artists and writers, but ID.8 is designed as a co-creative agent, emphasizing human control and input,” says Antony. “Such tools can accelerate the brainstorming process, allowing artists to quickly generate rough concepts and explore multiple visual styles before refining their work using their own artistic judgment.”

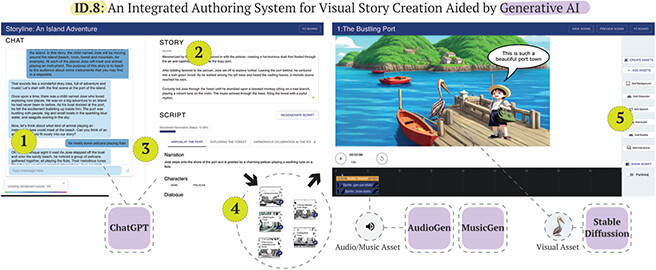

ID.8’s users begin the storytelling process by collaborating with a ChatGPT-powered chatbot to brainstorm ideas and develop a structured script. By posing questions, offering creative suggestions, and generating narrative components based on user input and feedback, the bot allows users to focus on higher-level creative decisions, like plot, character development, and thematic elements. Once the story is outlined, the bot automatically breaks it into a scene-by-scene storyboard for better visualization.

Users can then generate backgrounds, characters, voices, music, and sound effects using various integrated generative AI models. The system also provides tools for editing scenes, adjusting timing, and incorporating interactive elements, like using audience choices to determine the course of the narrative. Once the story is complete, users can preview, edit, and finalize it as a fully interactive visual experience.
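
To illustrate how audience choices can determine the course of a narrative, a branching story can be modeled as a set of scenes in which each choice points to the next scene. The sketch below is only an assumption about one simple way to do this; the `Scene` class, the `play` function, and the sample story are hypothetical and are not taken from ID.8's code.

```python
from dataclasses import dataclass, field


@dataclass
class Scene:
    """One storyboard scene; `choices` maps an audience choice to the next scene id."""
    text: str
    choices: dict[str, str] = field(default_factory=dict)


def play(scenes: dict[str, Scene], start: str, picks: list[str]) -> list[str]:
    """Follow the audience's picks through the story and return the visited scene ids."""
    path = [start]
    current = start
    for pick in picks:
        options = scenes[current].choices
        if pick not in options:
            raise ValueError(f"{pick!r} is not a choice in scene {current!r}")
        current = options[pick]
        path.append(current)
    return path


# Hypothetical three-scene story with a single branch point.
story = {
    "forest": Scene("A fox finds two paths.", {"left": "river", "right": "cave"}),
    "river": Scene("The fox crosses the river."),
    "cave": Scene("The fox explores the cave."),
}
```

Calling `play(story, "forest", ["right"])` walks from the opening scene to the cave branch, while a different pick leads to the river instead.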

ID.8 features a multi-stage visual story authoring workflow facilitated by generative AI. (1) Story creation begins with users collaborating with ChatGPT to create a storyline and (2) manually editing the story content for finer control. (3) ID.8 then automatically parses the story co-created with ChatGPT into a scene-by-scene script that the user can edit further. (4) The scenes from the script are automatically prepopulated and organized in the Storyboard and (5) edited in the Scene Editor, where users generate story elements with Stable Diffusion, AudioGen, and MusicGen and synchronize those elements on the canvas and the timeline.

To evaluate their system, the researchers conducted two studies on ID.8’s usability and co-creative potential. The studies yielded findings on how pre-designed templates and adjustable parameters can help users communicate their creative vision to the AI, how parallel processing can reduce wait times for asset generation, and what ethical concerns AI-generated content raises.
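
The idea of using parallel processing to cut asset generation wait times can be sketched as follows. This is a minimal illustration under stated assumptions, not the researchers' implementation: `generate_asset` is a hypothetical stand-in that sleeps instead of calling a real image or audio model, and a thread pool is just one of several ways to run such requests concurrently.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def generate_asset(request: tuple[str, str]) -> str:
    """Hypothetical stand-in for a slow model call (image, voice, or music)."""
    kind, prompt = request
    time.sleep(0.2)  # simulate generation latency
    return f"{kind}:{prompt}"


def generate_all(requests: list[tuple[str, str]]) -> list[str]:
    """Run all requests concurrently; results are returned in request order."""
    with ThreadPoolExecutor(max_workers=len(requests) or 1) as pool:
        return list(pool.map(generate_asset, requests))


# Hypothetical asset requests for a single scene.
requests = [
    ("background", "misty forest"),
    ("character", "red fox"),
    ("music", "gentle flute"),
]
assets = generate_all(requests)
```

Because the three simulated calls overlap, the total wait should be close to the time of one call rather than the sum of all three.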

The researchers plan to add safeguards such as trigger warnings and automatic content filters to help protect ID.8’s users from any biased or harmful output the models may create, but they acknowledge that such measures don’t address the root issue: the biased data on which these generative models are trained.

“Any biased outputs encountered during our study only further highlight the importance of critically examining the datasets that large generative models are being trained on to ensure safe content generation and to support their ethical use,” Antony says.

He and Huang are using their findings on thoughtful interaction design, user guidance, and safeguards to develop design guidelines for future co-creative systems. Plans for ID.8 involve expanding its co-creative paradigm to explore how generative AI can be used to create more expressive robot behaviors.

“By leveraging generative AI for multimodal content creation—text, visuals, audio, and now movement—we aim to develop more engaging and lifelike robotic interactions that feel natural and intuitive,” says Antony. “This could have impactful applications in education, therapy, and entertainment, where robots could become interactive storytellers, companions, or learning aids that adapt their behaviors in real time.”