A bouncing ball that never comes back down, or a person who glides across a room without taking a step? These are just some of the oddities that can emerge from today’s most advanced AI video generators. Johns Hopkins researchers have developed a new framework called DiffPhy that aims to correct these physics-defying glitches by bringing real-world physical laws into AI video generation.

“While recent advances in video diffusion models have made it possible to create compelling visuals from a text prompt, these models often ignore the fundamental rules of motion and interaction,” said co-author Ke Zhang, a PhD student in the Whiting School of Engineering’s Department of Electrical and Computer Engineering. “Objects may float, shift without apparent cause, or collide together in impossible ways. DiffPhy enhances these models by grounding them in physical principles, helping them generate scenes that do not just look real, but move and behave like they actually are.”

How DiffPhy Works

DiffPhy combines the strengths of large language models (LLMs) and video generation systems. Most existing video diffusion models learn physics indirectly by analyzing large amounts of video data. This approach can capture basic motion patterns, but it struggles with complex scenarios involving forces, collisions, or nuanced interactions between objects.

“DiffPhy takes a different approach. It uses LLMs to explicitly reason about the physical context of a given prompt,” said co-author Vishal Patel, an associate professor of electrical and computer engineering and a member of Johns Hopkins’ Data Science and AI Institute. “For example, if the prompt is ‘a box falls off a table,’ the LLM fills in the missing physical details—like the force that caused the fall, how the box should move through the air, and what happens when it hits the ground. This enhanced, physics-aware version of the prompt is then used to guide the video generation process.”
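The prompt-enrichment step Patel describes can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not DiffPhy's code. The instruction wording, the `build_instruction` and `enrich_prompt` helpers, and the `fake_llm` stand-in are all hypothetical. The `llm` argument can be any text-in, text-out callable wrapping a real model.

```python
from typing import Callable

# Hypothetical instruction template; the wording is an assumption,
# not the prompt DiffPhy actually uses.
PHYSICS_TEMPLATE = (
    "Rewrite the video prompt below so that it states the physical details "
    "a viewer would expect: the forces acting on each object, how each object "
    "moves over time, and what happens at any contact or collision.\n"
    "Prompt: {prompt}\n"
    "Physics-aware prompt:"
)


def build_instruction(prompt: str) -> str:
    """Wrap a user prompt in the (hypothetical) physics-reasoning instruction."""
    return PHYSICS_TEMPLATE.format(prompt=prompt.strip())


def enrich_prompt(prompt: str, llm: Callable[[str], str]) -> str:
    """Ask an LLM for a physics-aware version of the prompt.

    Falls back to the original prompt if the LLM returns only whitespace.
    """
    enriched = llm(build_instruction(prompt)).strip()
    return enriched or prompt


if __name__ == "__main__":
    # Offline stand-in for a real LLM call, so the sketch runs as-is.
    def fake_llm(instruction: str) -> str:
        return ("A cardboard box is nudged past the table edge, falls under "
                "gravity, hits the floor, bounces slightly, and comes to rest.")

    print(enrich_prompt("a box falls off a table", fake_llm))
```

The enriched text, rather than the user's short prompt, would then be passed to the video model. The fallback keeps generation working if the language model fails.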

To ensure that the resulting videos reflect both the meaning of the prompt and the laws of physics, DiffPhy introduces a second layer of oversight using a multimodal large language model (MLLM).

“This model serves as an intelligent supervisor, evaluating whether the generated video aligns with the described physical phenomena and makes sense visually,” said co-author Cihan Xiao, a PhD student in the Department of Electrical and Computer Engineering. “It checks not only if the video looks good but also if it behaves in ways that are physically plausible.”
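The article does not say exactly how the MLLM's verdicts feed back into DiffPhy. The sketch below shows only one simple way a judge like this could be used. It assumes a hypothetical judge that returns two scores in [0, 1]: one for how well the video matches the prompt, and one for physical plausibility. The sketch blends the two scores into a single weighted reward and uses that reward to rank candidate videos. `Judgement`, `reward`, `pick_best`, and the default 50/50 weighting are all assumptions, not part of DiffPhy.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class Judgement:
    """Hypothetical scores from an MLLM judge, each in [0, 1]."""
    semantic: float  # does the video show what the prompt describes?
    physics: float   # do objects move and interact plausibly?


def reward(j: Judgement, physics_weight: float = 0.5) -> float:
    """Blend the two scores into one number; the weighting is an assumption."""
    if not 0.0 <= physics_weight <= 1.0:
        raise ValueError("physics_weight must be in [0, 1]")
    return (1.0 - physics_weight) * j.semantic + physics_weight * j.physics


def pick_best(videos: Sequence[str],
              judge: Callable[[str], Judgement],
              physics_weight: float = 0.5) -> str:
    """Return the candidate video with the highest blended reward."""
    if not videos:
        raise ValueError("need at least one candidate video")
    return max(videos, key=lambda v: reward(judge(v), physics_weight))


if __name__ == "__main__":
    # Stub judge: fixed scores standing in for real MLLM output.
    scores = {
        "ball_floats": Judgement(semantic=0.9, physics=0.2),
        "ball_falls": Judgement(semantic=0.8, physics=0.9),
    }
    print(pick_best(list(scores), scores.__getitem__))  # prints "ball_falls"
```

Raising `physics_weight` favors plausible motion over literal prompt matching. A real system would presumably tune or learn this balance rather than fix it by hand.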

Building a Better Dataset

Training such a system requires a dataset rich in physical diversity, and most available datasets fall short. To address this, the team curated a new dataset called HQ-Phy, containing over 8,000 real-world video clips covering a broad range of physical actions and interactions.

“This dataset allows the model to learn from real examples rather than relying on limited or synthetic footage, which often lacks the complexity of natural motion and force,” said Patel.

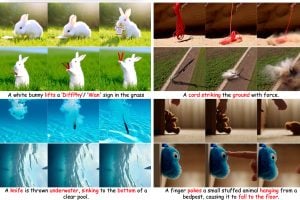

Testing Physical Realism

In testing, DiffPhy outperformed state-of-the-art models on benchmarks designed to evaluate physical realism in video generation. On VideoPhy2 and PhyGenBench, two benchmarks built around prompts describing everyday physical scenarios, DiffPhy generated videos that more accurately captured how objects and people should move and interact. Human evaluators also scored its videos higher on semantic accuracy and physical commonsense.

“Even without advanced prompting strategies like chain-of-thought reasoning, DiffPhy delivered strong results, improving even further when such techniques were applied, making it especially valuable for applications in simulation, robotics, gaming, and education,” said Zhang.

The paper’s co-authors also include Yiqun Mei and Jiacong Xu, both PhD students in the Department of Computer Science.