AI systems used to identify people in images and video may rely too much on body size, potentially affecting their accuracy and performance, according to a new study by Johns Hopkins researchers. The researchers analyzed person re-identification (ReID) models, which are widely used in public safety and surveillance applications, and introduced a framework called expressivity to measure how strongly attributes like body mass index (BMI), pose, and gender are reflected in the system’s decision-making. Their results appear on the preprint site arXiv.

Person ReID models are trained to recognize individuals across video frames or camera views by analyzing visual body features. But because they often rely heavily on physical traits that can change, like weight or posture, these models risk making errors: misidentifying people of similar height but different body compositions, or failing to identify someone whose weight has changed.

“In deep learning, what you don’t train for can be just as impactful as what you do,” said co-author Rama Chellappa, Bloomberg Distinguished Professor in electrical and computer engineering and biomedical engineering and Interim Co-director of the Data Science and AI Institute. “Our goal was to measure how much identity recognition models rely on body attributes they weren’t explicitly taught to recognize.”

To do this, the researchers adapted expressivity, a statistical method originally developed for face recognition models, to evaluate how strongly body-based ReID systems encode physical features in the representations they use to identify people.

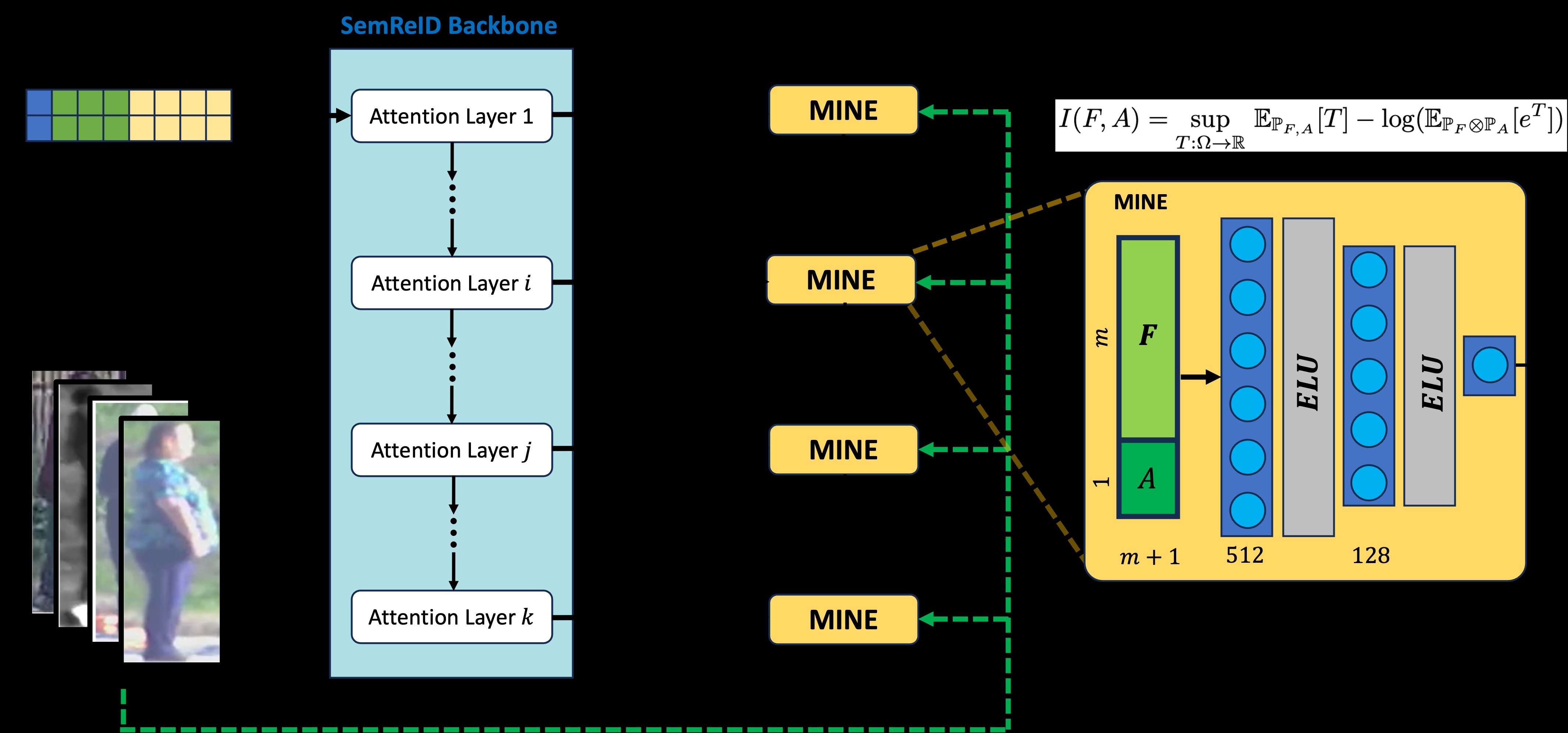

“Expressivity measures how much information about a specific attribute, such as BMI or pose angle, is embedded in the model’s internal feature representations,” said co-author Siyuan Huang, a PhD student in electrical and computer engineering. “We used this method to analyze SemReID, a state-of-the-art vision transformer model for person ReID.”
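One common way to write this down, assuming (as in the face recognition work expressivity comes from) that expressivity is the mutual information between the model's features $F$ and an attribute $A$, estimated with MINE (Mutual Information Neural Estimation), is the Donsker-Varadhan lower bound below. Here $T_\theta$ is a small neural network, sometimes called the statistics network; the notation is illustrative rather than taken from the study itself.

```latex
I(F;A) \;\ge\; \sup_{\theta}\;
  \mathbb{E}_{P_{FA}}\!\left[T_\theta(F,A)\right]
  \;-\; \log \mathbb{E}_{P_F \otimes P_A}\!\left[e^{T_\theta(F,A)}\right]
```

The first expectation uses matched pairs (each image's features with its own attribute value); the second uses mismatched pairs, where features are paired with attribute values from other images. A larger gap means the features carry more information about the attribute.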

Their findings were striking: BMI emerged as the most influential factor encoded in the model’s deepest layers, even though it was never an explicit training feature. BMI consistently ranked higher in expressivity than head orientation (pitch and yaw) and gender.

“The model is making identity predictions that rely heavily on body shape,” said co-author Basudha Pal, a PhD student in electrical and computer engineering. “Pose and even gender were secondary.”

While body size is part of how people appear, the researchers note that over-reliance on it can lead to inaccurate results, especially since BMI can fluctuate over time and often varies across different ages, genders, or racial groups.

To trace how these attributes evolved during learning, the researchers examined the transformer's successive processing stages, called layers, and measured how each one encoded physical features. They found that early layers focused on pose-related details, like head angle or body position, reflecting the network’s attention to how people are oriented in space. But as training progressed, those pose-related cues became less important, and BMI became the dominant attribute.

The researchers integrated a MINE block with the ViT-based SemReID backbone to compute the expressivity of its features with respect to attributes such as BMI, gender, pitch, and yaw. Internally, the MINE block uses a simple MLP with two hidden layers to compute the expressivity of the m-dimensional features F. Because these features are augmented with an attribute vector A, the input to the network is extended to (m+1) dimensions.
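To make the MINE setup more concrete, here is a minimal NumPy sketch of its pieces: appending a scalar attribute to m-dimensional features to form (m+1)-dimensional inputs, an MLP critic with two hidden layers, and the Donsker-Varadhan bound it is trained to maximize. All function names, the hidden width, and the toy data are hypothetical illustrations, not the study's code, and the gradient-based training loop that would fit the critic is deliberately omitted.

```python
import numpy as np


def make_mine_inputs(features, attribute, rng):
    """Build (m+1)-dimensional inputs for a MINE-style critic (hypothetical helper).

    Joint rows pair each feature vector with its own attribute value; marginal
    rows pair it with a shuffled attribute, approximating P(F) x P(A).
    """
    features = np.asarray(features, dtype=float)            # shape (n, m)
    a = np.asarray(attribute, dtype=float).reshape(-1, 1)   # shape (n, 1)
    joint = np.concatenate([features, a], axis=1)           # shape (n, m + 1)
    shuffled = a[rng.permutation(a.shape[0])]               # break the pairing
    marginal = np.concatenate([features, shuffled], axis=1)
    return joint, marginal


def init_critic(in_dim, hidden, rng):
    """Random weights for an MLP with two hidden layers and a scalar output."""
    sizes = [in_dim, hidden, hidden, 1]
    params = []
    for d_in, d_out in zip(sizes[:-1], sizes[1:]):
        W = rng.normal(0.0, np.sqrt(2.0 / d_in), size=(d_in, d_out))
        params.append((W, np.zeros(d_out)))
    return params


def critic(params, x):
    """Forward pass T(x): ReLU on both hidden layers, linear scalar output."""
    h = x
    for W, b in params[:-1]:
        h = np.maximum(0.0, h @ W + b)
    W_out, b_out = params[-1]
    return (h @ W_out + b_out).ravel()


def dv_lower_bound(t_joint, t_marginal):
    """Donsker-Varadhan bound: mean T(joint) - log mean exp T(marginal)."""
    t_marginal = np.asarray(t_marginal, dtype=float)
    c = t_marginal.max()  # subtract the max for a numerically stable log-mean-exp
    log_mean_exp = c + np.log(np.mean(np.exp(t_marginal - c)))
    return float(np.mean(t_joint) - log_mean_exp)


# Toy usage: random stand-ins for backbone features and one scalar attribute.
rng = np.random.default_rng(0)
n, m = 256, 8
features = rng.normal(size=(n, m))
attr = features[:, 0] + 0.1 * rng.normal(size=n)  # attribute correlated with F
joint, marginal = make_mine_inputs(features, attr, rng)
params = init_critic(m + 1, hidden=32, rng=rng)
estimate = dv_lower_bound(critic(params, joint), critic(params, marginal))
```

With an untrained critic the bound is loose; in a full MINE setup the critic's weights would be updated by gradient ascent on `dv_lower_bound`, and the converged value would serve as the expressivity score for that attribute. Categorical attributes such as gender could be encoded as 0/1 in the appended column, which is one reason a single framework can cover both fixed and variable traits.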

In contrast, gender was much less important. While gender expression can play a role in how people are perceived, the researchers caution that even subtle cues can still influence a model’s predictions—especially when training data is not balanced across different groups.

The team said that their expressivity framework offers several advantages over traditional tools used to evaluate AI models. It can handle both fixed traits, like gender, and more variable ones, like BMI or head angle. It also works with pre-trained models, eliminating the need for retraining. This makes it especially valuable for evaluating AI systems already in use in real-world settings.

“This approach helps us not only interpret what these models are learning but also understand the correlations they rely on,” said Pal. “With person ReID being used in sensitive applications, we need tools like this to make sure we’re building systems that are fair and robust.”

The study was supported by the Intelligence Advanced Research Projects Activity (IARPA) as part of the BRIAR project. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies, either expressed or implied, of ODNI, IARPA, or the U.S. Government.