Abstract: Bringing automation and intelligence into robotic surgery holds immense potential to revolutionize healthcare delivery by alleviating physician workload and extending critical treatments to underserved populations. This talk will discuss how the modular integration of model-based and learning-based methods enables robots to achieve three core capabilities required for automation in robotic surgery: high-precision environment understanding (sensing), reliable manipulation in medical environments that guarantees patient safety and minimizes failures (planning), and continuous accumulation of medical knowledge to operate in diverse clinical scenarios (adaptability). I will conclude with promising future directions for autonomous and intelligent surgical robot systems.

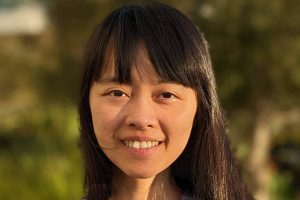

Bio: Zih-Yun “Sarah” Chiu is an assistant professor in the Department of Computer Science and a member of the Johns Hopkins Data Science and AI Institute. Her research goal is to develop the next generation of intelligent robots that can see, plan, move, and learn with the expertise of skilled medical professionals. Chiu’s work focuses on mathematically modeling medical and general knowledge and leveraging these models to enable robots to operate in complex medical environments precisely, safely, and efficiently. She was recognized as a 2024 EECS Rising Star and a 2025 Robotics: Science and Systems Pioneer. She also received the Best Paper Award in Medical Robotics at the 2023 IEEE International Conference on Robotics and Automation (ICRA).