To analyze the spoken word, linguists break human utterances into their smallest components: phonemes. Computer science professor Gregory Hager uses the word “dexeme” to describe the building blocks of the complex process he investigates: the series of movements a surgeon employs in the operating theater. “They’re simple, one- or two-joint motions,” says the director of the Computational Interaction and Robotics Laboratory, “the roll of a wrist to push in a needle or the straight-line movement to pull a suture.”

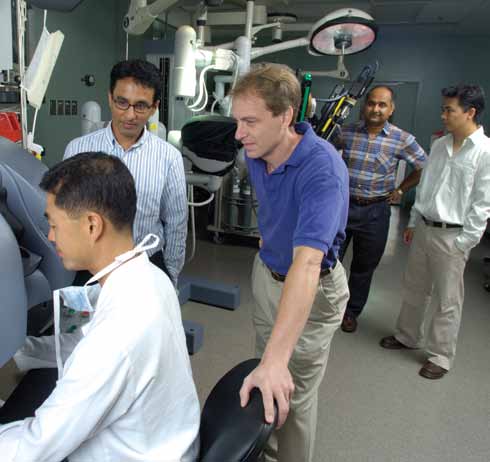

In a project dubbed “The Language of Surgery,” Hager collaborates with a multidisciplinary team of 20 scientists, clinicians, and students from six departments and three divisions across Johns Hopkins, including fellow engineering faculty Sanjeev Khudanpur, Rene Vidal, and Rajesh Kumar, and researchers David Yuh and Grace Chen from the School of Medicine. Together, they investigate the elegant motions of expert surgeons, track the learning curve of accomplished surgeons acquiring new techniques, and analyze the relatively inexpert movements of doctors in training. “Someone who isn’t skilled makes mistakes (mispronounces, if you will),” says Hager, noting that the team intends to use its findings to improve both surgeon education and patient outcomes. “We’re trying to understand what it means to mispronounce in the technical realm.”

Linguists rely on voice recordings to make such judgments. Hager and his team rely instead on real-time data from training exercises and from actual minimally invasive surgeries performed with the da Vinci surgical system. Seated at a console outfitted with a stereo video display and two master arms, a surgeon remotely manipulates the da Vinci surgical tools and endoscope to perform minimally invasive procedures, such as prostatectomies and heart valve repairs, in which the relative bulk and limited range of motion of the human wrist would demand larger incisions than the robot requires.

“Once you have the human attached to the robot performing surgery, not only does the device allow them to perform surgery, but we can record exactly what the surgeon did,” says Hager, whose team garnered permission to record both video and motion data from da Vinci systems at teaching hospitals across the country. “This is the first time in history, if you want to be grand about it, where we can get thousands of hours of human motion data on a relatively narrow and well-defined set of manipulation tasks.”

Using data from suturing, dissecting, and tissue-joining activities performed with da Vinci, the team has developed computer algorithms that identify the grammatical structure underlying each task. In the process, they have documented the eerie similarity in the movements of experts (side-by-side footage reveals nearly synchronized gestures), and they have refined the algorithms to distinguish stylistic embellishments, such as an extra needle adjustment habitually inserted while tightening a suture, from basic motions. And because the da Vinci recordings include training sessions both for accomplished surgeons new to the robot and for surgical residents inexperienced at both the task and the computer-aided technique, the findings also include preliminary information on how experts and novices acquire skill.

Analyses of those patterns could prove vital to contemporary training programs for surgical residents, and such instruction matters more than ever now that residents are limited in how many hours they may spend in the hospital each week. “Surgery is still taught the way it was more than 100 years ago,” says Hager, quoting the founding chief of surgery at Johns Hopkins Hospital, William Halsted: “See one, do one, teach one.” With a better understanding of how experts move and which gestures correspond to optimal healing, Hager and his team hope to do Halsted one better by enhancing the training process. “Ultimately,” he says, “what matters is patient outcomes.”