Design Project Gallery

Smart Skies: Real-Time Gesture Detection in Miniature Drone

- Program: Electrical and Computer Engineering

- Course: EN.520.440/640 Machine Intelligence on Embedded Systems

- Year: 2025

Project Description:

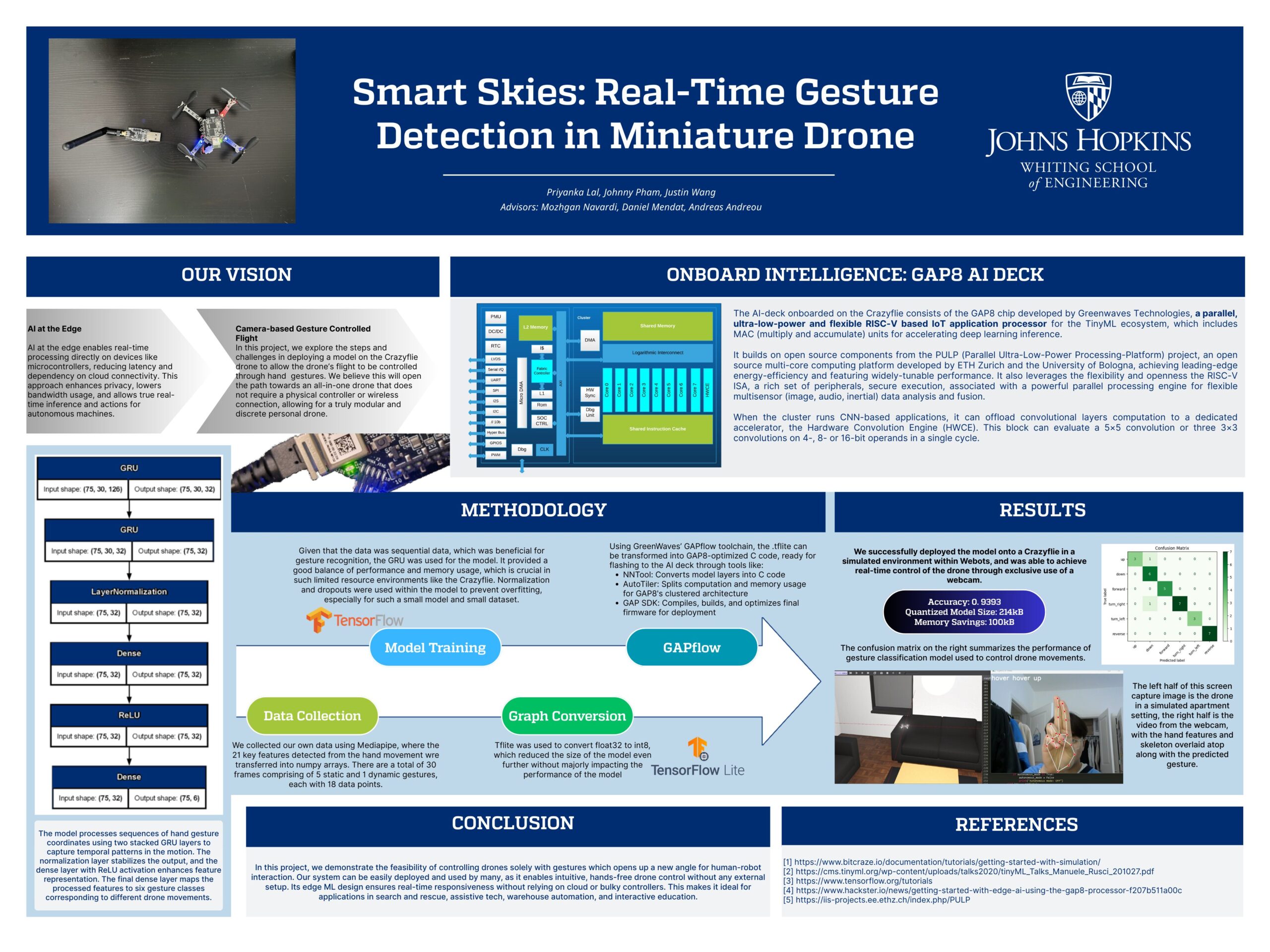

This project focuses on real-time gesture-based motion control of the Crazyflie 2.1 nano quadcopter using a machine learning model deployed on the GAP8 AI deck. The system uses camera-based hand gesture recognition: MediaPipe extracts the x-y coordinates of the tracked hand, and these coordinates are fed as input to a lightweight neural network.
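As a rough illustration of how per-frame hand coordinates could become network input, the sketch below buffers (x, y) positions in a sliding window and flattens them into one feature vector. It assumes the coordinates have already been extracted upstream (for example from a MediaPipe hand landmark). The class name `GestureWindow`, the window length, and the choice to express each point as an offset from the first frame are hypothetical and are not taken from the project.

```python
from collections import deque

WINDOW = 8  # hypothetical number of frames per gesture window


class GestureWindow:
    """Collects (x, y) hand positions and emits a flat feature vector."""

    def __init__(self, size=WINDOW):
        self.size = size
        # A bounded deque drops the oldest frame automatically when full
        self.points = deque(maxlen=size)

    def push(self, x, y):
        """Add one frame's hand position.

        Returns None until the window is full, then a list of
        2 * size floats: (dx, dy) offsets from the oldest frame.
        """
        self.points.append((x, y))
        if len(self.points) < self.size:
            return None
        x0, y0 = self.points[0]
        features = []
        for px, py in self.points:
            # Offsets from the first frame make the features independent
            # of where in the image the gesture started
            features.extend((px - x0, py - y0))
        return features
```

Each call to `push` after the window fills yields a new overlapping window, so a classifier could in principle be run once per frame.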

The gesture recognition model is trained offline on time-series data of hand positions captured during predefined motions, such as upward swipes for ascending, downward swipes for descending, and lateral movements for turning. The resulting neural network classifies dynamic gestures in real time. Once trained, the model is quantized and deployed to the ultra-low-power GAP8 processor.
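To make the quantization step concrete, here is a minimal sketch of symmetric per-tensor int8 quantization, one common way to shrink floating-point weights for integer-only processors. It is a generic illustration only: the scheme actually applied by the GAPflow toolchain may differ, and the function names `quantize_int8` and `dequantize` are hypothetical.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of a list of floats.

    Returns (quantized integers in [-127, 127], scale), where each
    original weight is approximated by q * scale.
    """
    max_abs = max(abs(w) for w in weights)
    # Avoid division by zero when every weight is 0
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the int8 range
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Map quantized integers back to approximate float weights."""
    return [v * scale for v in q]
```

With this scheme the reconstruction error of any weight is at most about half of `scale`, which is why quantization tends to cost little accuracy when the weights span a narrow range.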

The project demonstrates the feasibility of vision-based embedded ML on resource-constrained platforms. It integrates real-time gesture classification, camera-based input processing, and embedded deployment with the GAPflow toolchain, and it showcases skills in time-series vision data handling, model optimization, and real-time system design for edge AI applications in robotics.