Design Project Gallery

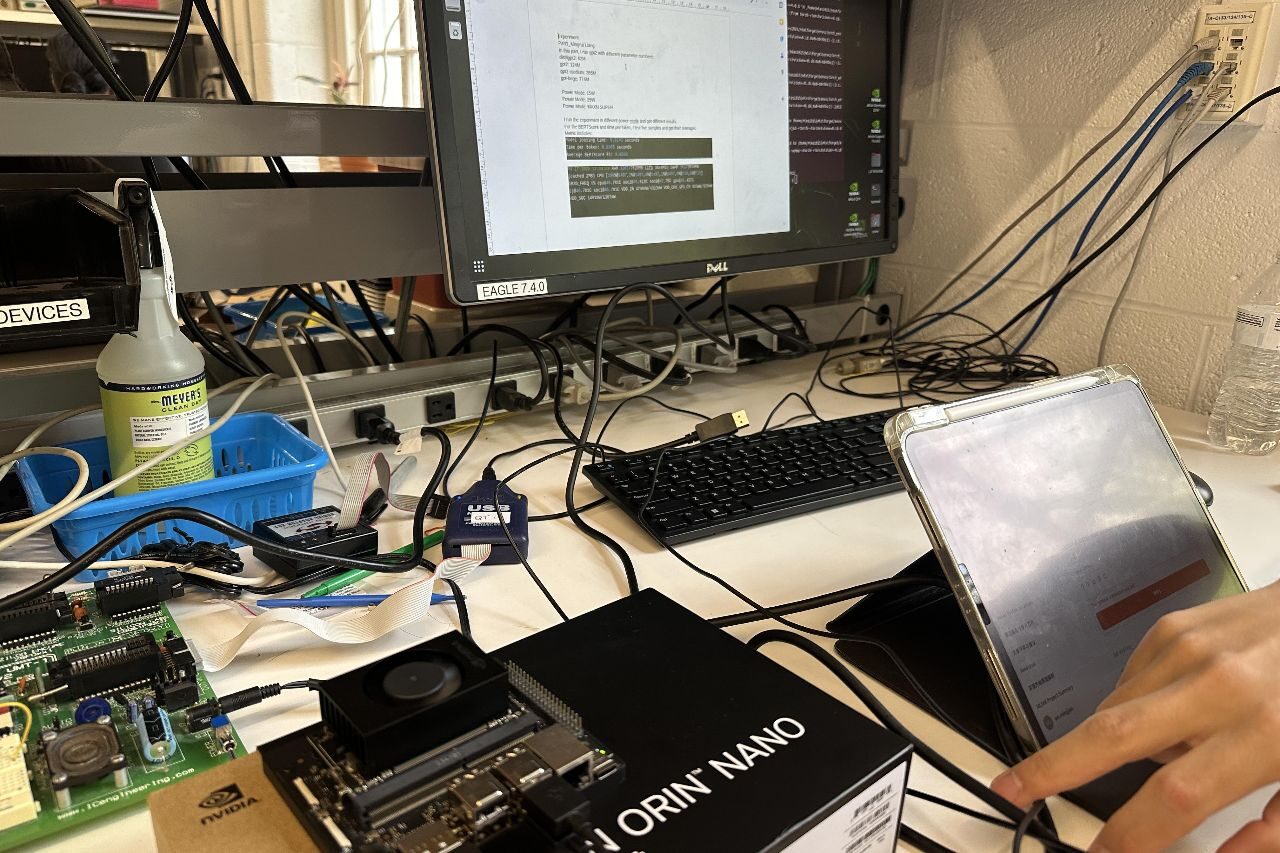

Evaluating and Fine-Tuning Lightweight LLMs on Jetson

- Program: Electrical and Computer Engineering

- Course: EN.520.440/640 Machine Intelligence on Embedded Systems

- Year: 2025

Project Description:

This project investigates the feasibility of running and fine-tuning lightweight Large Language Models (LLMs) on resource-constrained edge devices, specifically the NVIDIA Jetson platform. We aim to systematically evaluate several small-scale LLMs on inference accuracy, GPU memory usage, power consumption, and overall computational efficiency, combining the platform's built-in monitoring tools with external measurement methods to compare model performance against resource demands. After the evaluation phase, we fine-tune a selected model on a downstream task to assess how well the Jetson handles on-device training or adaptation. The study highlights the trade-offs between model performance and hardware limitations, and its results are intended to guide model selection and optimization for developers deploying LLMs in low-power edge AI applications.
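As an illustration of the kind of measurement described above, the following is a minimal sketch of how memory and power readings might be extracted from a Jetson monitoring log. It is not the project's actual tooling. It assumes log lines in the style of NVIDIA's `tegrastats` utility; the sample line, the field names (`RAM`, `GR3D_FREQ`, `VDD_IN`), and the helper names `parse_stats_line` and `summarize` are assumptions for illustration, and the real fields vary by Jetson model and software release. Because Jetson modules share one memory pool between CPU and GPU, the sketch treats the `RAM` field as a proxy for GPU memory usage.

```python
import re

# Hypothetical sample line in the style of tegrastats output.
# Real field names and layout depend on the Jetson model and L4T release.
SAMPLE = (
    "RAM 2448/7772MB (lfb 1x4MB) SWAP 0/3886MB (cached 0MB) "
    "GR3D_FREQ 45% VDD_IN 4513mW/4400mW"
)

RAM_RE = re.compile(r"RAM (\d+)/(\d+)MB")
GPU_RE = re.compile(r"GR3D_FREQ (\d+)%")
POWER_RE = re.compile(r"VDD_IN (\d+)mW/(\d+)mW")


def parse_stats_line(line):
    """Extract memory, GPU load, and power from one log line.

    Fields that are absent from the line are returned as None.
    """
    ram = RAM_RE.search(line)
    gpu = GPU_RE.search(line)
    power = POWER_RE.search(line)
    return {
        "ram_used_mb": int(ram.group(1)) if ram else None,
        "ram_total_mb": int(ram.group(2)) if ram else None,
        "gpu_load_pct": int(gpu.group(1)) if gpu else None,
        "power_mw": int(power.group(1)) if power else None,
    }


def summarize(samples):
    """Return peak memory use and mean power over a list of parsed samples."""
    ram = [s["ram_used_mb"] for s in samples if s["ram_used_mb"] is not None]
    power = [s["power_mw"] for s in samples if s["power_mw"] is not None]
    return {
        "peak_ram_mb": max(ram) if ram else None,
        "mean_power_mw": sum(power) / len(power) if power else None,
    }


if __name__ == "__main__":
    parsed = parse_stats_line(SAMPLE)
    print(parsed)
    print(summarize([parsed]))
```

In practice, the monitoring tool's output would be logged to a file while a model runs inference, and each line would be passed through `parse_stats_line` before summarizing. Readings taken this way would still need to be cross-checked against an external power meter, as the description notes.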