Recently, several approaches have been proposed to address various problems encountered in crowd counting. These approaches are essentially based on convolutional neural networks that require large amounts of data to train the network parameters. Considering this, we introduce a new large scale unconstrained crowd counting dataset (JHU-CROWD++) that contains “4,372” images with “1.51 million” annotations. In comparison to existing datasets, the proposed dataset is collected under a variety of diverse scenarios and environmental conditions. Specifically, the dataset includes several images with weather-based degradations and illumination variations, making it a very challenging dataset. Additionally, the dataset consists of a rich set of annotations at both image-level and head-level. Several recent methods are evaluated and compared on this dataset. The dataset can be downloaded from http://www.crowd-counting.com.

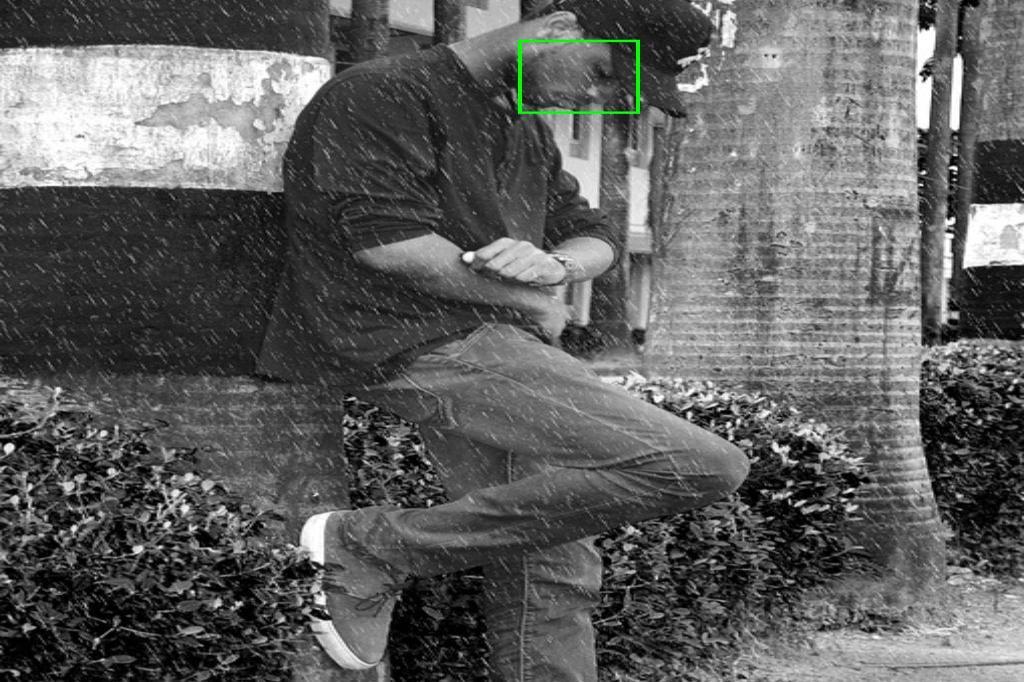

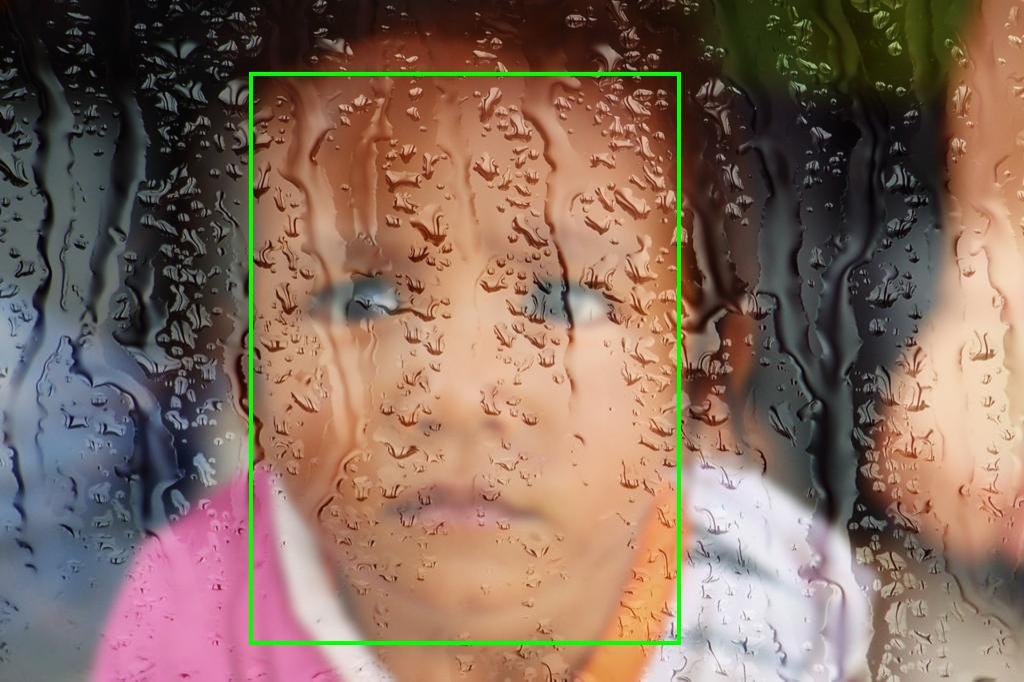

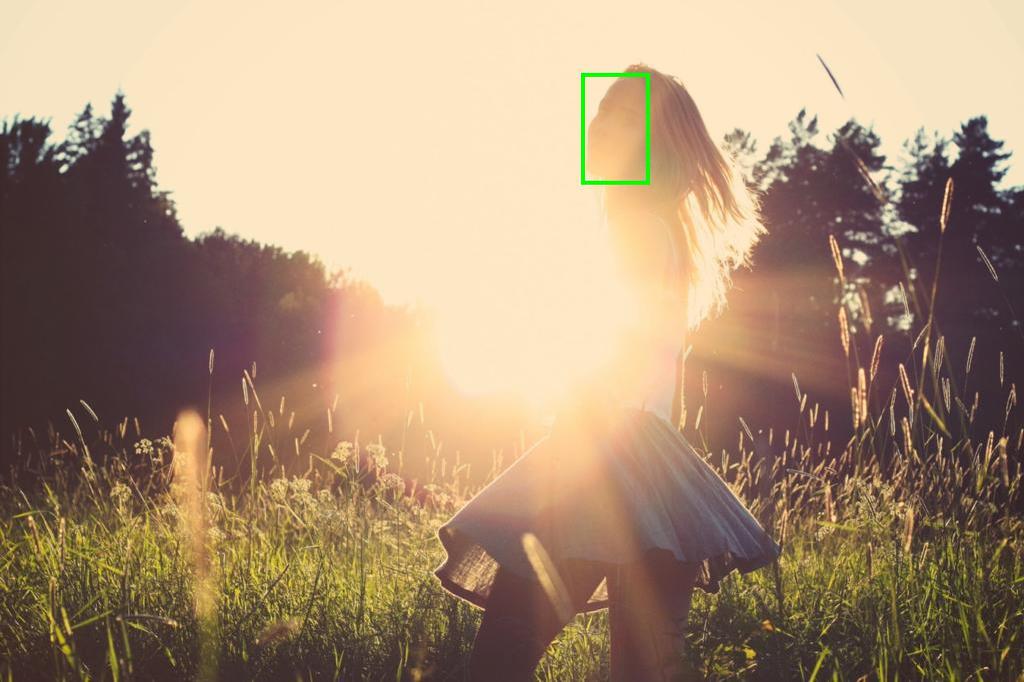

Face detection has witnessed immense progress in the last few years, with new milestones being surpassed every year. While many challenges such as large variations in scale, pose, appearance are successfully addressed, there still exist several issues which are not specifically captured by existing methods or datasets. In this work, we identify the next set of challenges that requires attention from the research community and collect a new dataset of face images that involve these issues such as weather-based degra- dations, motion blur, focus blur and several others. We demonstrate that there is a considerable gap in the performance of state-of-the-art detectors and real-world require- ments. Hence, in an attempt to fuel further research in unconstrained face detection, we present a new annotated Unconstrained Face Detection Dataset (UFDD) with several challenges and benchmark recent methods. Additionally, we provide an in-depth analysis of the results and failure cases of these methods.

Severe weather conditions such as rain and snow adversely affect the visual quality of images captured under such conditions thus rendering them useless for further usage and sharing. In addition, such degraded images drastically affect performance of vision systems. Due to lack of appropriate datasets for training deep networks on this task, we introduce a dataset consisting of pairs of rainy and clean images. The train set consists of a total of 700 real-world clean images, with 500 images chosen randomly from the fist half of UCID dataset and 200 images chosen randomly from BSD-500 train set. The test set consists of a total of 100 images, with 50 images chosen randomly from second half of UCID dataset and the rest 50 chosen randomly from the test set of BSD-500 dataset. We generate the corresponding rainy images by synthesizing rain-streaks of different intensities and orientations.