The answers, my friend, may be blowin’ in the wind—or, for that matter, hidden in the nether regions of the brain. But how to see them? Through tools ranging from toy turbines to a “magic box,” Hopkins engineers are pushing to make visible that which is indiscernible to the naked eye.

Wind Energy

One of the most promising new areas of research in sustainability involves something that can’t be seen or even held. But it can be harnessed. And that’s why Charles Meneveau’s research on wind energy just received a grant from the National Science Foundation (NSF) under its newest funding category, Energy for Sustainability.

“Wind farms are springing up everywhere,” says Meneveau, the Louis M. Sardella Professor in Mechanical Engineering. “Worldwide, about $23 billion was invested in wind farming equipment in 2006, and it’s expected that total installed capacity will double by the end of the decade. The potential is enormous.”

Meneveau estimates that 3 million giant wind turbines, each generating an average of 1 megawatt, could make wind energy our country’s only energy source. Spaced half a mile apart, the turbines would cover a square approximately 900 miles on each side, an area spanning most of the Midwestern states between the Mississippi and the Rockies. “Of course this isn’t going to happen,” he concedes, but, he adds, “the estimate at least gives us a sense of the massive scale of our energy consumption.”
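The estimate is easy to reproduce. The sketch below assumes that “spaced half a mile apart” means each turbine occupies its own half-mile-by-half-mile square of land; the constant and function names are illustrative only.

```python
import math

TURBINES = 3_000_000    # turbines in Meneveau's estimate
AVG_POWER_MW = 1.0      # average output per turbine, in megawatts
SPACING_MILES = 0.5     # assumption: each turbine occupies its own 0.5 x 0.5 mile cell


def total_power_tw(turbines: int, avg_power_mw: float) -> float:
    """Total average output in terawatts (1 TW = 1,000,000 MW)."""
    return turbines * avg_power_mw / 1_000_000


def square_side_miles(turbines: int, spacing_miles: float) -> float:
    """Side length of a square grid that holds every turbine at the given spacing."""
    area_sq_miles = turbines * spacing_miles ** 2   # one spacing-by-spacing cell per turbine
    return math.sqrt(area_sq_miles)


if __name__ == "__main__":
    print(f"Total average output: {total_power_tw(TURBINES, AVG_POWER_MW):.1f} TW")
    print(f"Side of the square:   {square_side_miles(TURBINES, SPACING_MILES):.0f} miles")
```

Run as a script, this reports a total average output of 3.0 TW and a square about 866 miles on a side (750,000 square miles), consistent with the rounded figure of roughly 900 miles.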

With wind energy on an “upsweep,” Meneveau, post-doc Raul Cal, and co-investigator Luciano Castillo of Rensselaer Polytechnic Institute propose to use the NSF funding to study the interactions between wind farms and the atmosphere. “Wind turbines extract kinetic energy. They slow the wind down, but perhaps increase turbulence in their wakes; this in turn may affect evaporation from the ground, and, honestly, we’re not sure what else happens when wind farms are implemented on a massive scale,” says Meneveau.

And what Meneveau ultimately plans to produce is only slightly less ethereal than the wind itself: more accurate computer models of the relationship between wind and wind turbines, models that would help environmental planners place turbines to maximize energy extraction while minimizing harm to the environment.

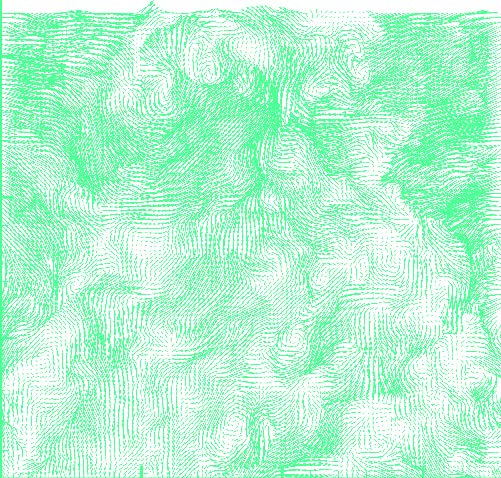

In order to develop these models, Meneveau and collaborators will use “toy” turbines to collect detailed fluid dynamic measurements of the air velocity between and behind turbines. These model turbines, each about five inches tall, are being installed in the Corrsin wind tunnel in Maryland Hall. “We’ll make the invisible turbulent motions of air visible by using advanced laser-based measurement methods. Microscopic particles floating in the air will be illuminated, digitally photographed, and these images can be analyzed computationally. This will enable us to deduce the instantaneous velocity field,” Meneveau explains.
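The article does not name the measurement method, but the description (illuminated particles, digital photographs, computed velocity field) matches particle image velocimetry (PIV), which we assume here. In PIV, two images taken a short time apart are split into small windows, and the displacement of the particle pattern in each window is found by cross-correlation. The sketch below does this by brute force for one window; the function names, pixel size, and time step are hypothetical, and practical PIV codes use FFT-based correlation with sub-pixel peak fitting instead.

```python
from typing import List, Tuple

Image = List[List[float]]  # grayscale intensities, indexed [row][column]


def best_shift(frame_a: Image, frame_b: Image, max_shift: int) -> Tuple[int, int]:
    """Return the (dy, dx) pixel shift that best maps frame_a onto frame_b.

    Brute-force cross-correlation: for every candidate shift, sum the products of
    overlapping intensities and keep the shift with the largest sum.
    """
    rows, cols = len(frame_a), len(frame_a[0])
    best, best_score = (0, 0), float("-inf")
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            score = 0.0
            for y in range(rows):
                for x in range(cols):
                    yb, xb = y + dy, x + dx
                    if 0 <= yb < rows and 0 <= xb < cols:
                        score += frame_a[y][x] * frame_b[yb][xb]
            if score > best_score:
                best, best_score = (dy, dx), score
    return best


def velocity(shift: Tuple[int, int], pixel_size_m: float, dt_s: float) -> Tuple[float, float]:
    """Convert a (dy, dx) pixel shift into (u, v) in m/s (v positive downward in the image)."""
    dy, dx = shift
    return dx * pixel_size_m / dt_s, dy * pixel_size_m / dt_s


if __name__ == "__main__":
    # Two synthetic 10x10 frames: the same two particles, moved 1 pixel down and 2 right.
    a = [[0.0] * 10 for _ in range(10)]
    b = [[0.0] * 10 for _ in range(10)]
    a[2][2] = a[5][6] = 1.0
    b[3][4] = b[6][8] = 1.0
    shift = best_shift(a, b, max_shift=3)
    print(shift, velocity(shift, pixel_size_m=1e-4, dt_s=1e-3))
```

Repeating this for every window in the image pair yields one velocity vector per window, which is what a vector map of the flow displays.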

“Wind energy is just one of several options to be developed. If wind farms are to be implemented on a massive scale, we need to improve our tools to predict their interactions with the environment in the lower atmosphere,” he says. “That’s what we’re after.”

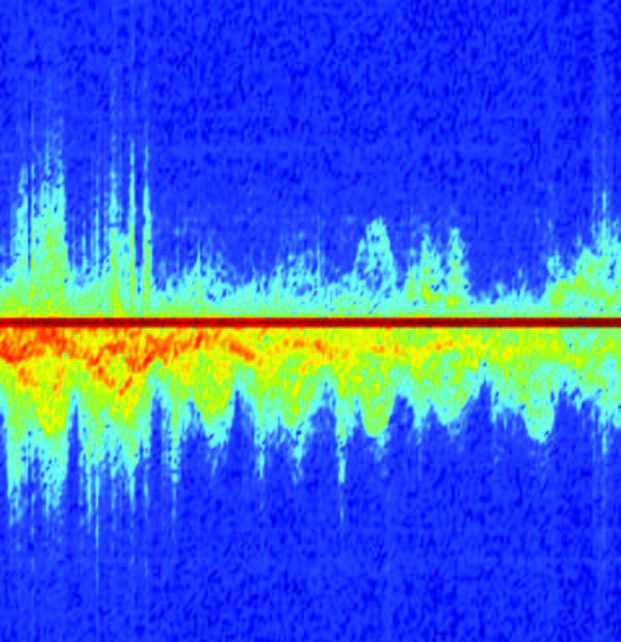

The vector map above shows a sample snapshot of turbulent velocity made visible in the wind tunnel. Says Meneveau, “We will study the fluid dynamics and kinetic energy fluxes from such data and develop the computer models that can then be applied to the full-scale wind farms.”
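The article does not say which statistics the team extracts. One common choice in wind-tunnel studies of turbine arrays, assumed here, is to collect many snapshots, average them at each grid point, and compute the Reynolds shear stress ⟨u′v′⟩ together with the vertical flux of mean-flow kinetic energy it carries, −⟨u′v′⟩U. The function below is a hypothetical sketch for a single point; with planar measurements only two velocity components are available, so the turbulent kinetic energy it reports is the in-plane part only.

```python
from statistics import fmean
from typing import Dict, Sequence


def point_statistics(u: Sequence[float], v: Sequence[float]) -> Dict[str, float]:
    """Turbulence statistics at one grid point from many velocity snapshots.

    u: streamwise velocity samples (m/s); v: vertical velocity samples (m/s).
    """
    U, V = fmean(u), fmean(v)                     # time-averaged velocities
    up = [x - U for x in u]                       # fluctuations u'
    vp = [y - V for y in v]                       # fluctuations v'
    uu = fmean([a * a for a in up])               # <u'u'>
    vv = fmean([b * b for b in vp])               # <v'v'>
    uv = fmean([a * b for a, b in zip(up, vp)])   # Reynolds shear stress <u'v'>
    return {
        "U": U,
        "k_inplane": 0.5 * (uu + vv),             # in-plane turbulent kinetic energy
        "uv": uv,
        "ke_flux_down": -uv * U,                  # downward flux of mean kinetic energy
    }


if __name__ == "__main__":
    # Toy samples: faster-than-average air tends to arrive moving downward (u' > 0, v' < 0).
    print(point_statistics([5.0, 7.0, 5.0, 7.0], [1.0, -1.0, 1.0, -1.0]))
```

A positive `ke_flux_down` indicates turbulence carrying kinetic energy from the faster air above down toward the turbines, one of the effects such models would need to capture.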

Brain Imaging

Though magnetic resonance imaging (MRI) technology has made the interior of the brain visible to clinicians and researchers for more than 20 years, there are still parts of the brain “that would never be visible unless we were to cut the brain wide open,” says Bennett Landman, a graduate student in biomedical engineering who also works with Professor Jerry Prince in the Electrical and Computer Engineering lab. “We’re looking at the impact of tiny, subtle changes that occur in these places,” Landman explains.

In particular, Landman and Prince are focusing on the cerebellum, a small structure of the brain involved in motor control, learning, and planning. “The cerebellum isn’t fixed. It’s behind the brain and not very firmly attached, so it moves around a bit,” Landman says. But what it lacks in size and stability, it makes up for in content. “It’s small and densely packed with neurons,” says Landman. In fact, even though the cerebellum comprises just 10 percent of the brain’s total volume, 50 percent of all of the brain’s neurons reside in it.
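Taking these figures at face value, comparing each region’s share of neurons with its share of volume shows how densely the cerebellum is packed:

```latex
\[
\underbrace{\frac{0.50}{0.10}}_{\text{cerebellum}} = 5,
\qquad
\underbrace{\frac{1 - 0.50}{1 - 0.10}}_{\text{rest of brain}} = \frac{0.50}{0.90} \approx 0.56,
\qquad
\frac{5}{0.50/0.90} = 9 .
\]
```

In other words, a given volume of cerebellum holds roughly nine times as many neurons as the same volume of the rest of the brain.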

“We are investigating the cerebellum’s 3-D structure to shed light on how and why some almost imperceptible changes may factor into devastating degenerative diseases,” says Landman, “such as Alzheimer’s disease or spinocerebellar ataxia, a debilitating motor control disease.”

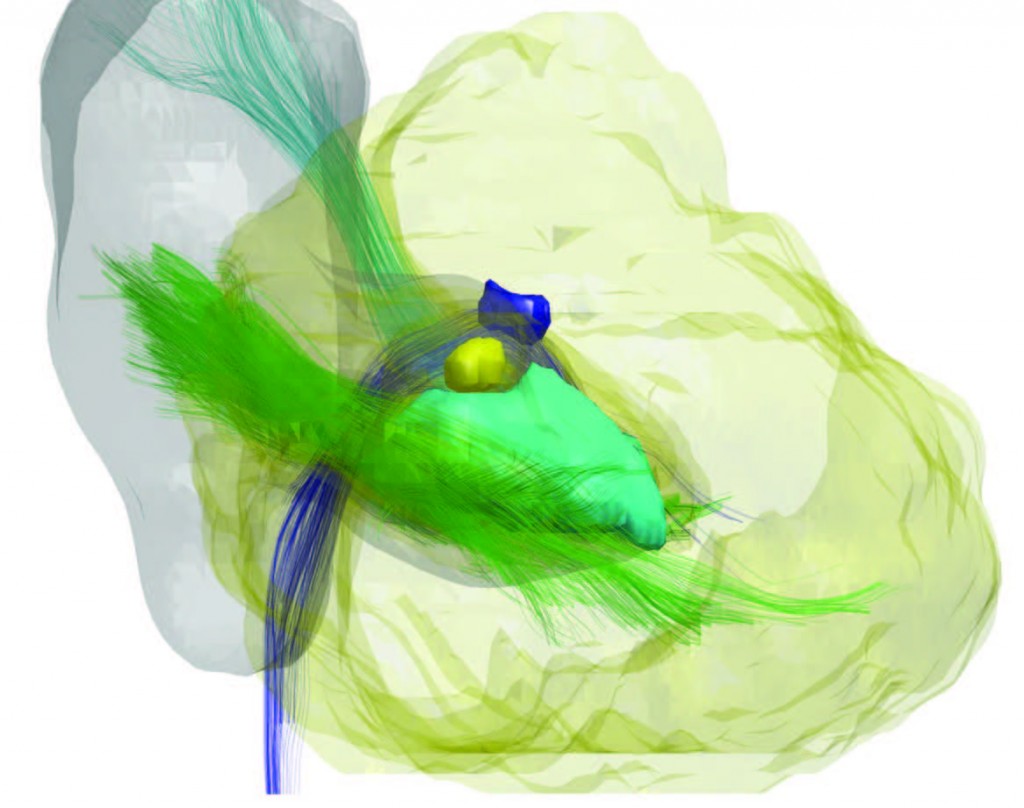

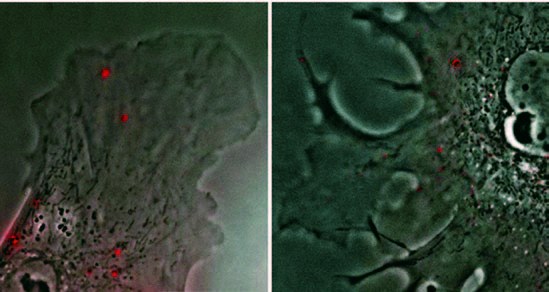

But “seeing” inside the cerebellum is no easy matter. MRI alone can’t do it, because the gray matter of the cerebellar nuclei is too small and of too low contrast to show on conventional MRI scans. So Landman and Prince are combining MRI with a technique known as Diffusion Tensor Imaging (DTI), which maps 3-D structures by repeating MRI scans that are each sensitized to the diffusion of water in a different direction. Combining the technologies enables them to see the bright fiber tracts of neurons entering the cerebellum and to discover any “holes” in these tracts.

“The absence of a signal on DTI along with information on the overall structure from a conventional MRI allows us to infer the whereabouts of the cerebellar nuclei,” Landman explains. “Within a couple of minutes, we can see the major white matter tracts of the cerebellum, its surface features, and how certain nuclei are arranged.” Viewing all of these features at once, something that would have been impossible without these combined technologies, allows Prince and Landman to then analyze their findings with statistical shape models.
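DTI fits a symmetric 3×3 diffusion tensor to each voxel. A standard summary of that tensor is fractional anisotropy (FA): close to 1 where water diffuses along one direction, as in coherent white matter tracts, and close to 0 where diffusion is the same in all directions, as in gray matter. The sketch below shows one hypothetical way to flag such “holes”: compute FA and keep voxels that lie inside the cerebellum (according to a mask derived from the conventional MRI) but have low FA. The 0.2 threshold and the masking rule are illustrative assumptions, not Landman and Prince’s actual pipeline.

```python
import math
from typing import List, Sequence

Tensor = Sequence[Sequence[float]]  # symmetric 3x3 diffusion tensor for one voxel


def fractional_anisotropy(d: Tensor) -> float:
    """FA = sqrt(3/2) * ||D - (tr D / 3) I||_F / ||D||_F.

    Equivalent to the usual eigenvalue formula, because the Frobenius norm of a
    symmetric matrix equals the root-sum-square of its eigenvalues.
    """
    mean_diff = (d[0][0] + d[1][1] + d[2][2]) / 3.0
    dev_sq = 0.0   # squared norm of the anisotropic (deviatoric) part
    full_sq = 0.0  # squared norm of the whole tensor
    for i in range(3):
        for j in range(3):
            dev = d[i][j] - (mean_diff if i == j else 0.0)
            dev_sq += dev * dev
            full_sq += d[i][j] * d[i][j]
    if full_sq == 0.0:
        return 0.0
    return math.sqrt(1.5 * dev_sq / full_sq)


def candidate_nuclei(tensors: Sequence[Tensor], in_cerebellum: Sequence[bool],
                     fa_threshold: float = 0.2) -> List[int]:
    """Indices of voxels inside the structural mask whose FA falls below the threshold."""
    return [i for i, (t, inside) in enumerate(zip(tensors, in_cerebellum))
            if inside and fractional_anisotropy(t) < fa_threshold]


if __name__ == "__main__":
    white_matter = [[1.7e-3, 0, 0], [0, 0.3e-3, 0], [0, 0, 0.3e-3]]  # mm^2/s, elongated
    gray_matter = [[0.8e-3, 0, 0], [0, 0.8e-3, 0], [0, 0, 0.8e-3]]   # mm^2/s, isotropic
    print(fractional_anisotropy(white_matter), fractional_anisotropy(gray_matter))
```

Because FA is a ratio, it does not depend on the units of diffusivity, which is why the example tensors can be given in mm²/s without conversion.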

“Ultimately,” Landman says, “this breadth of new features will enable us to see changes in these little-understood diseases—changes that can help clinicians with the staging, prognosis, and treatment of patients.”

Subtle Movement

Spend some time in a crowded subway station, watching hundreds of commuters move to and fro, and it becomes nearly impossible to discern one person’s gait from another’s. Unless hampered by a limp or some other defining characteristic, most people appear similar in the way they stride briskly along.

But with today’s heightened security concerns, it’s more important than ever to be able to detect subtle movements that are unique to one person but not visible to the casual observer, notes Andreas Andreou, MS ’82, PhD ’86, professor of electrical and computer engineering.

And Andreou is developing the technology to do just that.

“I’m looking for the magic box,” he explains as he digs through piles of electrical equipment in his Barton Hall lab. “Well, it’s really an ultrasonic micro-Doppler system.” Locating it, he plugs in a receiver and transmitter. “You can get all the parts at Radio Shack,” he divulges. Then Zhaonian Zhang, one of Andreou’s graduate students, places the box on an overturned plastic storage bin, about six inches off the floor, in a crowded hallway. Andreou, hands in pockets, asks, “Are you ready?” Starting squarely in front of the contraption, he strolls back and forth four times.

The velocity of the moving object (Andreou) relative to the observer (the “magic box”) is recorded—including the motions of his arms, legs, and torso. As soon as Andreou is done, Zhang, with a few quick taps on a computer keyboard, converts the collected data into a colorful graph that represents Andreou’s signature shuffle. If Zhang were to follow in Andreou’s footsteps, the resulting graph would look entirely different.
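The physics behind the box is the Doppler effect: sound reflected from a body moving toward or away from the sensor returns shifted in frequency by about f_d = 2·v·f₀/c, where f₀ is the transmitted frequency and c the speed of sound. Arms, legs, and torso each move at their own speed, so each adds its own small shift (hence “micro-Doppler”), and plotting the shifts over time gives a colorful time-frequency graph like the one Zhang produces. The sketch below recovers only the strongest shift in each short window. It assumes a 40 kHz transmitter and an echo already mixed down to a complex baseband signal; both are hypothetical choices, since the article does not describe the system’s internals.

```python
import cmath
import math
from typing import List, Sequence

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 °C
CARRIER_HZ = 40_000.0   # assumption: a common ultrasonic transducer frequency


def doppler_velocity(shift_hz: float, carrier_hz: float = CARRIER_HZ,
                     c: float = SPEED_OF_SOUND) -> float:
    """Radial velocity (m/s, positive = approaching) from f_d = 2 v f0 / c, valid for v << c."""
    return shift_hz * c / (2.0 * carrier_hz)


def peak_frequency(samples: Sequence[complex], sample_rate_hz: float) -> float:
    """Signed frequency (Hz) of the strongest DFT bin of a complex baseband window.

    A direct O(n^2) DFT for clarity; a real system would use an FFT.
    """
    n = len(samples)
    best_k, best_mag = 0, -1.0
    for k in range(n):
        acc = sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        if abs(acc) > best_mag:
            best_k, best_mag = k, abs(acc)
    k_signed = best_k if best_k <= n // 2 else best_k - n  # upper bins are negative frequencies
    return k_signed * sample_rate_hz / n


def velocity_profile(samples: Sequence[complex], sample_rate_hz: float,
                     window: int) -> List[float]:
    """Dominant radial velocity in each consecutive, non-overlapping window."""
    return [doppler_velocity(peak_frequency(samples[i:i + window], sample_rate_hz))
            for i in range(0, len(samples) - window + 1, window)]


if __name__ == "__main__":
    fs, n = 2000.0, 200
    approach = [cmath.exp(2j * math.pi * 280.0 * t / fs) for t in range(n)]   # +280 Hz echo
    recede = [cmath.exp(-2j * math.pi * 120.0 * t / fs) for t in range(n)]   # -120 Hz echo
    print(velocity_profile(approach + recede, fs, n))
```

Keeping the full spectrum of each window, rather than only its peak, is what would preserve the separate signatures of the arms, legs, and torso.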

With this box, Andreou is searching for the defining characteristics of human gait—information that could ultimately help differentiate, for instance, between a man walking slowly because he has an injury and someone walking slowly because he’s otherwise encumbered, perhaps by a bomb-laden backpack.

“Are they in a hurry? Hurt? Carrying a concealed weapon?” Andreou asks. “With this technology we will be able to dissect the language of body movement.”

Tracking Nanoparticles

He’s not using smoke and mirrors, but it sounds mighty close. Professor Denis Wirtz, associate director of the Institute for NanoBioTechnology (INBT), uses light to view and manipulate nanoparticles: particles on the scale of one-billionth of a meter, 1,000 times smaller than what can be viewed under a conventional microscope.

The exploitation of these ultra-minute particles, Wirtz believes, may hold the key to our understanding of, and treatment for, a wide range of diseases.

“It’s very simple. We excite them with fluorescent light: very, very intense red, yellow, and green light,” he explains. Illuminating the nanoparticles so intensely creates a halo of light, which serves as a sort of proxy for the particles. “By making them visible,” Wirtz says, “we can then track them, one at a time. We can, for example, put them in cancer cells and follow the process of metastasis. We gain a better understanding of how cancer spreads.”

At the INBT, Wirtz and his colleagues are manipulating the illuminated nanoparticles so that they interact with proteins, to determine whether it’s possible to modify the behavior of cells. “We can watch them and see that they’re very dynamic and only inhabit a tiny part of the cell. It’s only now, through this process, that we’re beginning to understand that all proteins in a single cell don’t behave the same way.”

Through tracking nanoparticles, Wirtz and his colleagues are gaining new understanding of progeria, a fatal disease that causes accelerated aging in children. While researchers had long thought the disease’s progress was like normal aging, just much faster, Wirtz and his colleagues are finding otherwise. “We use latex beads, 100 nanometers in size, and we ballistically bombard the cells of a person with progeria with these beads,” he says. “The beads lodge themselves inside the cells and then we track them. Because they’ve been illuminated, we can see their displacement with exquisite resolution.”

Wirtz has demonstrated that the cells from people with progeria are brittle and soft. “They behave more like liquid than healthy cells, which are stiff and stretchy,” he explains. He has also observed that in progeria cells the connection between the cytoskeleton and the nucleus is disrupted. As a result, the cells move more slowly and lose their sense of direction. “We used to believe that progeria was a defect of the nucleus, but now we believe it’s a defect of the cytoskeleton,” he says.
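A standard way to turn tracked bead positions into a “liquid-like or solid-like” verdict, assumed here since the article does not give Wirtz’s exact analysis, is the mean squared displacement (MSD) as a function of lag time. A bead in a simple liquid wanders freely, so its MSD grows roughly in proportion to the lag (log-log slope near 1); a bead caged in an elastic network stays put, so its MSD levels off (slope near 0). The function names and the two-lag slope estimate below are illustrative.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # bead position in one frame (e.g., in nanometers)


def msd(track: List[Point], lag: int) -> float:
    """Time-averaged mean squared displacement of one bead at a lag of `lag` frames."""
    if not 0 < lag < len(track):
        raise ValueError("lag must be between 1 and len(track) - 1")
    sq = [(track[i + lag][0] - track[i][0]) ** 2 + (track[i + lag][1] - track[i][1]) ** 2
          for i in range(len(track) - lag)]
    return sum(sq) / len(sq)


def msd_exponent(track: List[Point], lag_short: int, lag_long: int) -> float:
    """Log-log slope of the MSD between two lags.

    About 1 for free diffusion (liquid-like), about 0 for a caged bead (solid-like),
    and 2 for steady straight-line motion.
    """
    return (math.log(msd(track, lag_long) / msd(track, lag_short))
            / math.log(lag_long / lag_short))


if __name__ == "__main__":
    straight = [(0.1 * i, 0.0) for i in range(100)]  # steady drift: MSD grows as lag^2
    print(msd(straight, 1), msd_exponent(straight, 1, 10))
```

Comparing this slope between beads in healthy cells and in progeria cells is one way a shift toward more liquid-like behavior would show up in the data.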

Insights gained through illuminated nanoparticles, Wirtz states, could ultimately change the treatment of many diseases. “We’re working on ways to rescue defective cells,” he adds.

Seeing the Bay’s Future Through Its Past

In order to predict the future of the Chesapeake Bay, professor and paleobotanist Grace Brush looks back 14,000 years and studies a half-meter-long tube of mud. To the untrained eye, the future doesn’t seem very promising, nor does the past appear particularly scenic. In fact, it all looks like varying shades of dark brown sludge.

For Brush, the dark brown sludge gives plenty of cause for concern.

“Back in the 1970s, people became concerned about the Bay’s future because of declining fish populations and the disappearance of underwater grasses,” she explains. At that time, the Environmental Protection Agency (EPA) funded a group of engineers and scientists to quantify and define the reasons for those changes. “I attended the meetings and was amazed that nobody wanted to examine the past. I wanted to know if we were looking at a ‘boom and bust’ trend, or if these declines were more recent and unique.”

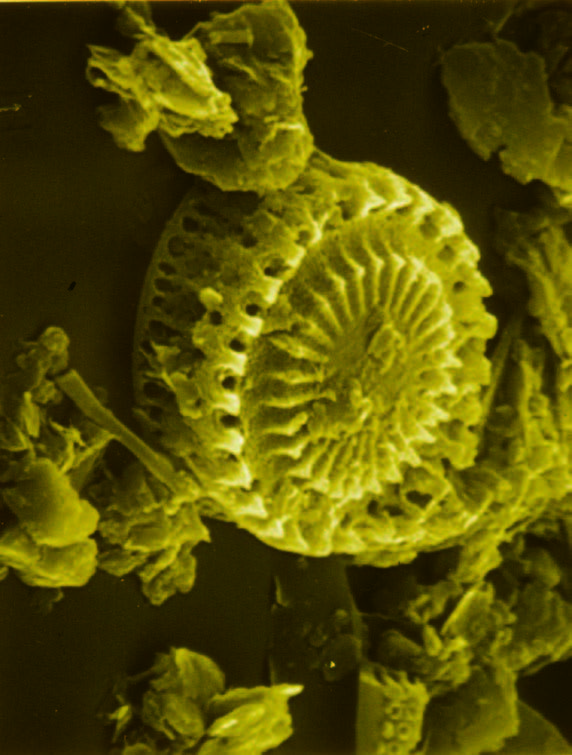

With some of the EPA funding, Brush took core samples from the Bay, including sediment from approximately 12,000 B.C. to the present. “Looking at these samples under a microscope, we found that the appearance of ragweed pollen increased dramatically in the 1700s, at the time of European settlement, a result of the land surrounding the Bay being cleared for agriculture,” she explains. “By the late 1800s, 80 percent of the Chesapeake watershed was deforested and the effects on the Bay had become obvious in the sediment cores.”

As Brush and her students studied the core samples, they discovered that in addition to the increase in ragweed pollen, dramatic changes had also occurred in the Bay’s algae—an important marker for the Bay’s overall health.

“Over the centuries, as the Bay’s water became more turbid from runoff, there was not enough light for the diatoms [a major form of algae found in sediment] to be able to photosynthesize and produce oxygen.” Much of the oxygen that was available was used up by the decomposition of dead plants and animals. “Eventually,” says Brush, “oxygen became too scarce for many bottom-dwelling animals to survive.”

“What amazed me was that everyone knew the Bay was filling up with sediment,” Brush concludes. “They had begun dredging in the 1800s. People knew there was sediment coming in.” But the major concern was economic, since sedimentation threatened to interfere with the transport of goods and people. “Only within the past few decades,” says Brush, “have people come to understand that the basic problem is ecological.”

Information Flow

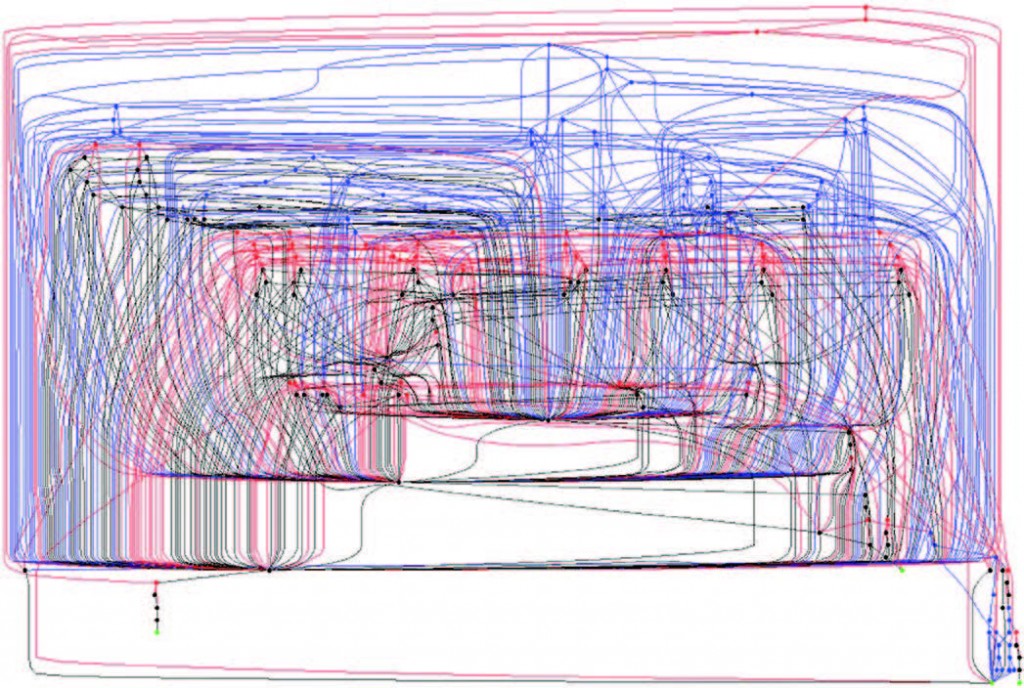

“Within every computer is an incredibly complicated piping system through which information flows like water,” explains Scott Smith, chair of the Department of Computer Science. “And what’s streaming through here,” he says, pointing to the Mac on his desk, “is more complex than New York City’s plumbing system.”

As all this data rushes about, says Smith, it encounters an intricate series of forks, merges, and switches. At each of these decision points in the data’s path, one of two things can happen: either the information is directed where it needs to go, or it is diverted somewhere it should not go. When the latter occurs, the integrity of the entire system is compromised. “When pollution enters our water supply system, we want to know which consumers will be affected,” Smith says. “And this is the same kind of tracking and integrity we’re studying in computers. For instance, an online bank should be able to tell customers their own balances, but not the balances of other customers. We want to make sure information goes where it should, but only where it should. Safety is our goal.”

In order to do this, Smith, along with graduate student Mark Thober, looks at code very, very closely. The two develop algorithms that automatically follow the data flow and determine exactly where it branches out and which output locations it could affect. “We’re very conservative in our approach,” Smith says, “but this is because if there’s truly the potential for something bad to happen, we want to be able to warn programmers.” The other reason Smith and Thober track their data so carefully as it courses through the computer’s innards is related to security. “We don’t want corrupted data seeping in, but we also don’t want secure data leaking out due to badly written code. Essentially, we don’t want anyone drinking polluted water.”
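Smith and Thober’s algorithms are not described in detail here, so the following is only a generic, hypothetical illustration of conservative information-flow tracking. The program is summarized as a graph in which an edge from `a` to `b` means “the value of `b` may depend on `a`”, either directly or because `b` is set inside a branch that tests `a`. Everything reachable from a secret source counts as possibly polluted, and any public output in that set is reported. Like the pipe-network analogy, this errs on the side of caution: it may warn about a path that never carries data in practice, but it never misses a path that is present in the graph.

```python
from collections import deque
from typing import Dict, Iterable, List, Set


def tainted_nodes(flows: Dict[str, List[str]], sources: Iterable[str]) -> Set[str]:
    """Every node that data from `sources` might reach (breadth-first reachability)."""
    seen = set(sources)
    queue = deque(seen)
    while queue:
        node = queue.popleft()
        for nxt in flows.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def leaks(flows: Dict[str, List[str]], sources: Iterable[str],
          public_outputs: Iterable[str]) -> List[str]:
    """Public outputs that may receive data from `sources`, sorted for stable reporting."""
    return sorted(tainted_nodes(flows, sources) & set(public_outputs))


if __name__ == "__main__":
    # Hypothetical online bank: Alice may see her own balance but not Bob's.
    flows = {
        "balance_alice": ["page_alice"],
        "balance_bob": ["page_bob", "overdrawn_flag"],
        "overdrawn_flag": ["banner_alice"],  # bug: a branch on Bob's data edits Alice's page
    }
    print(leaks(flows, ["balance_bob"], ["page_alice", "banner_alice"]))
```

Here the analysis reports `banner_alice` as a place where Bob’s balance could seep into what Alice sees, which is exactly the kind of warning a programmer would want before the code ships.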